Flaky tests waste 6 to 8 hours of engineering time every week. They block CI pipelines, trigger false alarms, and force teams to rerun builds multiple times just to get a green light.

Poor software quality costs US organizations an estimated $319 billion, with testing identified as the weakest link. When teams can't trust their tests, they either waste time investigating false failures or, worse, ignore real issues that slip through.

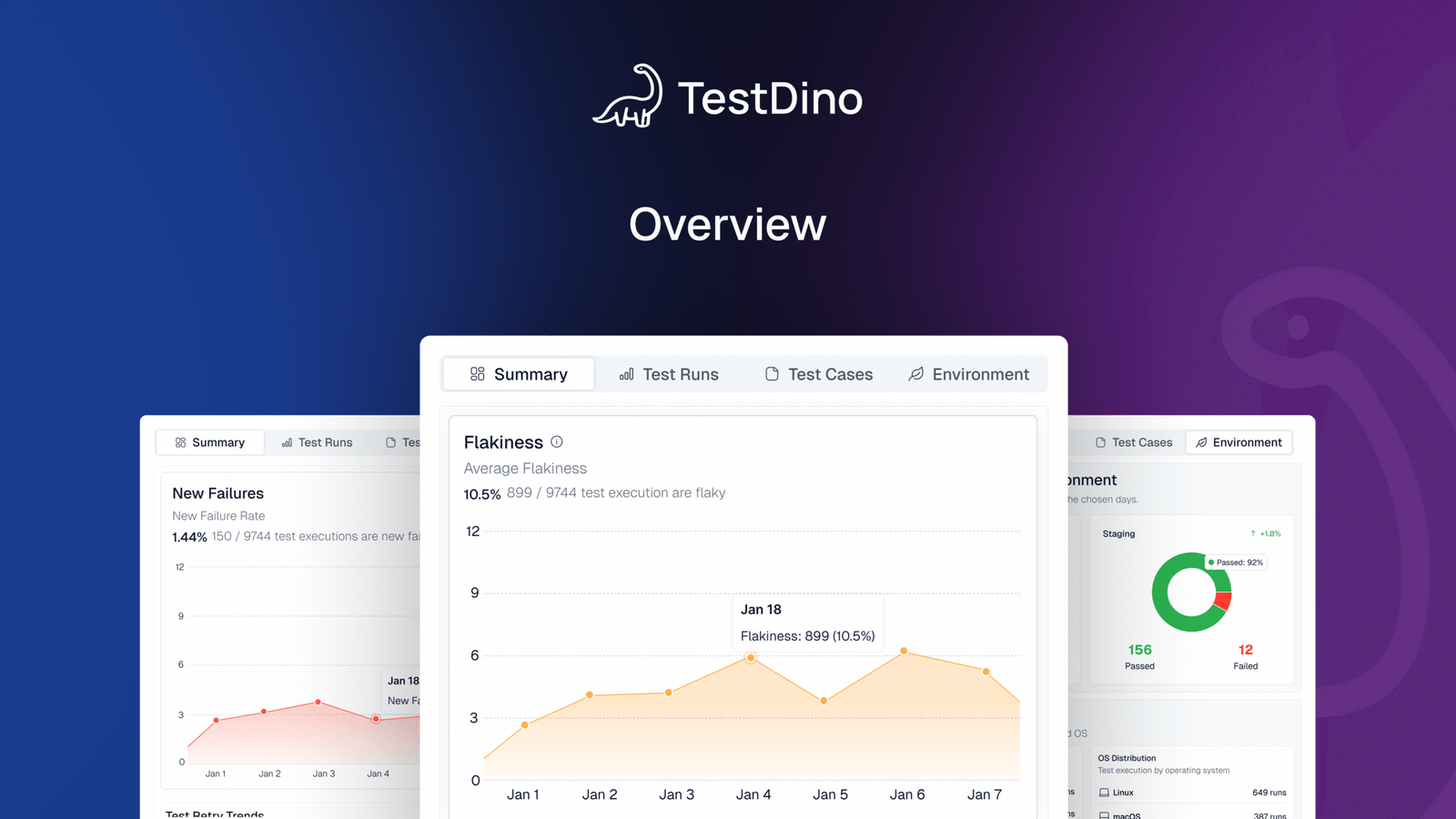

In this guide, we’ll compare the best flaky test detection tools available in 2025, highlighting which ones will help you ship faster.