Playwright Real-Time Reporting vs. Post-Execution Upload

Stop waiting for test uploads. Playwright real-time reporting streams results instantly as tests run, cutting CI overhead by 2-8 min/run and reducing debugging time by 40-60%.

Your 12-minute Playwright suite just finished. But the CI job? Still running. It's uploading trace files, HTML reports, and JSON artifacts to your reporting tool. Another 8 minutes of CI runners sitting idle, burning money, while your team waits to find out if anything broke.

This is how most Playwright test reporting works today. Run the tests, generate the reports, and upload everything after the suite finishes. It worked fine when you had 50 tests. But now you have 500 across 4 shards, and the upload step takes longer than you think.

Playwright real-time reporting flips this model. Instead of waiting for the full suite to finish, results stream to your reporting platform as each test completes. No bulk upload at the end. No idle CI runners. No lost data when a container crashes mid-run.

This article breaks down both architectures side by side and shows what actually changes when Playwright teams make the switch.

How post-execution reporting works in Playwright today

Most teams follow the same pattern without questioning it. Here's what happens every time your CI runs Playwright tests:

```text
CI Runner Starts
      │
      ▼
npx playwright test (runs full suite)
      │
      ▼
Playwright generates JSON report + HTML report + trace files
      │
      ▼
CI step uploads artifacts to reporting tool
      │
      ▼
Reporting tool processes and indexes results
      │
      ▼
CI job finally completes ← You can see results here
```

Every step waits for the previous one to finish. Sequential. Blocking. And the upload step scales with your suite size.

A typical setup looks like this:

```typescript
import { defineConfig } from '@playwright/test';

export default defineConfig({
  reporter: [
    ['list'],
    ['html', { open: 'never' }],
    ['json', { outputFile: 'test-results/results.json' }],
  ],
  use: {
    trace: 'on-first-retry',
    screenshot: 'only-on-failure',
    video: 'on-first-retry',
  },
});
```

And in your CI workflow:

```yaml
- name: Run Playwright tests
  run: npx playwright test --shard=${{ matrix.shard }}

- name: Upload report artifacts
  uses: actions/upload-artifact@v4
  if: always()
  with:
    name: playwright-report-${{ strategy.job-index }}
    path: |
      playwright-report/
      test-results/
    retention-days: 7
```

That upload-artifact step is where things get expensive. Trace files are the biggest offender. A single test with trace: 'on' produces 5-20MB, depending on the test length and page complexity. Run 500 tests across 4 shards with tracing enabled, and you're uploading hundreds of megabytes to gigabytes per CI run.
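To see how the trace mode drives upload volume, here is a back-of-the-envelope estimator. It's a sketch: the per-trace size comes from the 5–20 MB range above, the share of tests that get a first retry is a hypothetical input you'd measure on your own suite, and other Playwright trace modes (such as 'retain-on-failure') are left out for brevity.

```typescript
// Back-of-the-envelope trace volume per CI run.
// Assumption: each recorded trace is roughly `traceMB` megabytes.
type TraceMode = 'on' | 'on-first-retry' | 'off';

function estimateTraceUploadMB(
  totalTests: number,
  traceMB: number,
  mode: TraceMode,
  retriedFraction = 0, // share of tests that get a first retry (measure this)
): number {
  // trace: 'on' records a trace for every test
  if (mode === 'on') return totalTests * traceMB;
  // trace: 'on-first-retry' records one only when a test is retried
  if (mode === 'on-first-retry') return totalTests * retriedFraction * traceMB;
  return 0; // 'off'
}

const lowMB = estimateTraceUploadMB(500, 5, 'on');
const highMB = estimateTraceUploadMB(500, 20, 'on');
console.log(`500 tests, trace: 'on' -> ${lowMB / 1000}-${highMB / 1000} GB per run`);
```

At the quoted per-trace sizes, 500 tests with trace: 'on' lands in the 2.5–10 GB range per run, while 'on-first-retry' scales with how often tests retry.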

For more details on Playwright's built-in reporter system, see our complete guide to Playwright reporting.

Where the time actually goes (CI overhead breakdown)

Let's break down a real CI job timeline. Not just test execution, but everything around it.

| Phase | Typical Duration | What's happening |
|---|---|---|
| Setup | 30–60s | Install deps, download browsers |
| Test execution | 8–15 min | Your actual Playwright tests |
| Report generation | 30–90s | JSON, HTML, traces compile |
| Artifact upload | 2–8 min | Push traces + reports to tool |
| Post-processing | 1–3 min | Tool parses and indexes |

Here's what the upload step alone costs at different scales, assuming 22 workdays a month and $0.006 per runner-minute:

| Suite Size | Shards | Upload Time/Shard | Runs/Day | Monthly CI Overhead |
|---|---|---|---|---|
| 100 tests | 2 | ~2 min | 10 | ~$5.28 |
| 500 tests | 4 | ~5 min | 20 | ~$52.80 |
| 1000 tests | 8 | ~8 min | 20 | ~$168.96 |
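The monthly figures follow from simple arithmetic: shards × upload minutes per shard × runs per day × workdays × per-minute rate. The sketch below reproduces them, assuming 22 workdays and $0.006 per runner-minute, which are the values the table's numbers work out to. `monthlyUploadCost` is a hypothetical helper; plug in your own CI provider's rate.

```typescript
// Monthly cost of the artifact-upload step alone.
// Assumptions: 22 workdays/month and $0.006 per runner-minute (override as needed).
interface UploadScenario {
  shards: number;
  uploadMinPerShard: number;
  runsPerDay: number;
}

function monthlyUploadCost(
  s: UploadScenario,
  workdays = 22,
  ratePerMinute = 0.006,
): number {
  // Every shard pays for its own upload on every run.
  const minutes = s.shards * s.uploadMinPerShard * s.runsPerDay * workdays;
  return Math.round(minutes * ratePerMinute * 100) / 100; // round to cents
}

const rows: Array<[string, UploadScenario]> = [
  ['100 tests', { shards: 2, uploadMinPerShard: 2, runsPerDay: 10 }],
  ['500 tests', { shards: 4, uploadMinPerShard: 5, runsPerDay: 20 }],
  ['1000 tests', { shards: 8, uploadMinPerShard: 8, runsPerDay: 20 }],
];

for (const [label, scenario] of rows) {
  console.log(`${label}: $${monthlyUploadCost(scenario).toFixed(2)}/month`);
}
```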

But the money isn't even the biggest problem. The real cost is developer wait time. Your engineers don't see the first failure until the entire suite finishes executing, generates reports, and uploads everything. If test #3 out of 500 fails with a critical auth bug, nobody knows until test #500 finishes and the upload completes.

That's 15-25 minutes of lost time before anyone can start debugging.

I've worked with teams where the upload step alone added 30% to their total CI job duration. They had slow Playwright tests, and they also had slow reporting. The tests got all the optimization attention. The reporting overhead went unnoticed.

What Playwright real-time reporting actually means

Playwright real-time reporting changes the data flow entirely. Instead of collecting everything at the end and uploading in bulk, results stream to the reporting platform as each test completes.

Here's the difference:

```text
CI Runner Starts
      │
      ▼
npx playwright test begins
      │
      ├── Test #1 completes → result streams instantly
      ├── Test #2 completes → result streams instantly
      ├── Test #3 fails → failure streams instantly (you see it NOW)
      ├── ...
      └── Test #500 completes → result streams instantly
      │
      ▼
CI job ends ← No upload step. Done.
```

The reporter sends a small JSON payload for each test: name, status, duration, error message, and stack trace. Maybe 2-5KB per test. Over HTTP or WebSocket. In milliseconds.

Compare that to the post-execution model, where you're uploading megabytes of trace files in a single blocking step.

The key insight is this: test metadata (pass/fail, error, duration) is tiny. Trace files are huge. Playwright real-time reporting separates the two. It sends metadata instantly and handles traces differently, either through async upload, on-demand download, or skipping them for passing tests entirely.

The practical result? Your CI job ends the moment the last test finishes. Not minutes later, after the upload completes. And you see real-time test results from the first test onward, not after the full suite wraps up.
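To make the mechanics concrete, here is a minimal sketch of what a streaming reporter does inside Playwright's reporter lifecycle: build a small JSON payload in `onTestEnd`, send it right away, and wait for in-flight sends in `onEnd`. The `StreamingReporter` and `httpSender` names and the payload shape are hypothetical, and the local `TestCaseLike`/`TestResultLike` types mirror only the subset of Playwright's `TestCase`/`TestResult` fields used here so the example compiles on its own. It is not any vendor's actual implementation.

```typescript
// Subset of Playwright's TestCase / TestResult shapes used by this sketch.
// In a real reporter, import these types from '@playwright/test/reporter'.
interface TestCaseLike {
  id: string;
  titlePath(): string[];
  location: { file: string; line: number };
}

interface TestResultLike {
  status: 'passed' | 'failed' | 'timedOut' | 'skipped' | 'interrupted';
  duration: number; // milliseconds
  retry: number;
  error?: { message?: string; stack?: string };
}

// Per-test metadata only: a few KB of JSON, never trace files.
interface ResultPayload {
  testId: string;
  title: string;
  file: string;
  status: TestResultLike['status'];
  durationMs: number;
  retry: number;
  errorMessage?: string;
  stack?: string;
}

type Send = (payload: ResultPayload) => Promise<void>;

class StreamingReporter {
  private pending: Promise<void>[] = [];

  // The transport is injected so HTTP, WebSocket, or a test double can be swapped in.
  constructor(private readonly send: Send) {}

  // Called once per finished test: build the payload and ship it immediately.
  onTestEnd(test: TestCaseLike, result: TestResultLike): void {
    const payload: ResultPayload = {
      testId: test.id,
      title: test.titlePath().filter((part) => part !== '').join(' > '),
      file: test.location.file,
      status: result.status,
      durationMs: result.duration,
      retry: result.retry,
      errorMessage: result.error?.message,
      stack: result.error?.stack,
    };
    // Don't block the run on network I/O; log (don't throw) if a send fails.
    this.pending.push(
      this.send(payload).catch((err) => console.error('result stream failed:', err)),
    );
  }

  // Playwright awaits onEnd, giving in-flight sends a chance to finish.
  async onEnd(): Promise<void> {
    await Promise.all(this.pending);
  }
}

// Hypothetical HTTP transport: POSTs each payload to `url` (Node 18+ global fetch).
function httpSender(url: string): Send {
  return async (payload) => {
    const res = await fetch(url, {
      method: 'POST',
      headers: { 'content-type': 'application/json' },
      body: JSON.stringify(payload),
    });
    if (!res.ok) throw new Error(`HTTP ${res.status}`);
  };
}
```

In a real project you'd implement the `Reporter` interface from `@playwright/test/reporter`, make the class the default export of a file such as `./streaming-reporter.ts`, and list that path in the `reporter` array. Because reporter options from the config are plain data, that constructor would take a URL option and build the sender itself instead of receiving a function.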

A streaming reporter config looks conceptually like this:

```typescript
import { defineConfig } from '@playwright/test';

export default defineConfig({
  reporter: [
    ['list'],
    // Streaming reporter sends results as each test completes
    ['@testdino/playwright', {
      token: process.env.TESTDINO_TOKEN,
    }],
  ],
  use: {
    trace: 'on-first-retry',
  },
});
```

No upload-artifact step needed in your CI workflow. The reporter handles everything during test execution, not after.

Side-by-side comparison: what changes for your team

Here's a direct comparison across the dimensions that matter most to Playwright teams running CI at scale.

| Dimension | Post-Execution Upload | Playwright Real-Time Reporting |
|---|---|---|
| Time to first failure visibility | After full suite + upload completes | Seconds after test fails |
| CI job duration | Execution + generation + upload | Execution only |
| CI crash behavior | All results lost | Completed results preserved |
| Trace handling | Bulk upload (GBs) | Async or on-demand |
| CI minutes wasted | 2–10 min per run on upload | Near-zero overhead |
| Parallel shard visibility | See results after all shards finish | See each shard in real time |
| Debugging start time | Minutes to hours after failure | Immediate |
| Cost at scale (4–8 shards, 20 runs/day) | ~$52–168/month in upload overhead | ~$0 overhead |

Failure visibility speed. This is the single biggest change most teams feel. When a critical login test fails at test #3, you don't want to wait for tests #4 through #500 plus the upload step. With Playwright real-time reporting, the failure shows up on your dashboard within seconds. Your developer can start debugging while the rest of the suite is still running.

I've seen this cut mean-time-to-debug by 40-60% for teams doing active releases. It's the difference between catching a production blocker in 2 minutes and catching it in 25.

Crash resilience. This one doesn't get enough attention. If a CI container gets killed, whether from an out-of-memory error, a spot instance reclaim, or a timeout, the post-execution model loses everything. No report was generated, so nothing was uploaded. The entire run is a black hole.

With streaming, every completed test result is already on the reporting platform. If 400 out of 500 tests finished before the crash, you have those 400 results. You know what passed, what failed, and where to pick up. This alone is worth the switch for teams running on spot instances or resource-constrained CI.

CI cost impact. Small per-run savings compound fast. Let's say you save 5 minutes per run by removing the upload step. That's 5 minutes × 20 runs/day × 22 workdays = 2,200 minutes per month per shard. At $0.006 per runner-minute (the same rate behind the cost table above), that's $13.20 per shard per month. With 4 shards, you're saving about $53/month. With 8 shards, about $106/month.

Not a fortune on its own. But add developer wait time on top of the CI cost, and the real savings become clear.

Trace handling. Streaming doesn't mean you lose traces. It means you decouple them from result visibility. The most common pattern is to upload traces only for failed or flaky tests, either after the run completes or on demand when someone actually needs to debug. Passing tests don't need traces. Skipping them cuts upload volume by 80-95% for healthy suites.

For teams tracking Playwright reporting metrics, this separation also makes it easier to measure what actually matters: test health versus infrastructure overhead.
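The "failed or flaky only" trace policy can be sketched as a small filter that runs after the suite finishes. It assumes Playwright's convention of attaching a recorded trace to the test result as an attachment named `trace` with a file `path`; the `AttemptLike` type and both function names are hypothetical, and the actual upload destination is left out.

```typescript
// Subset of Playwright's TestResult fields this policy needs.
interface Attachment {
  name: string;
  contentType: string;
  path?: string;
}

interface AttemptLike {
  status: 'passed' | 'failed' | 'timedOut' | 'skipped' | 'interrupted';
  retry: number; // 0 for the first attempt, 1+ for retries
  attachments: Attachment[];
}

// Keep traces for failures and for passes that needed a retry (flaky);
// first-try passes are skipped.
function needsTrace(attempt: AttemptLike): boolean {
  if (attempt.status === 'failed' || attempt.status === 'timedOut') return true;
  return attempt.status === 'passed' && attempt.retry > 0;
}

// Collect trace file paths worth uploading, in the order the attempts finished.
function tracePathsToUpload(attempts: AttemptLike[]): string[] {
  const paths: string[] = [];
  for (const attempt of attempts) {
    if (!needsTrace(attempt)) continue;
    for (const att of attempt.attachments) {
      // Attempts without a recorded trace simply contribute nothing.
      if (att.name === 'trace' && att.path !== undefined) paths.push(att.path);
    }
  }
  return paths;
}
```

The resulting list could then feed a separate, non-blocking upload step after the run, or be held until someone requests a trace.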

When post-execution upload still makes sense

Streaming isn't right for every team. Here's when the traditional model works well.

Small suites. If you run fewer than 50 tests in under 5 minutes, the upload overhead is about 30 seconds. Adding a streaming reporter adds complexity with little benefit.

Local development. You watch the terminal and see results as tests run. Streaming to a remote dashboard adds no value when running tests on your laptop.

Air-gapped environments. If your CI system can’t make outbound HTTP calls during tests, then uploading data in a batch after the tests finish is your only choice. Some regulated industries need this kind of network isolation.

One-off runs. If you run tests weekly and only need a snapshot, the native Playwright HTML reporter works well. Open the file, scan the results, and move on.

The tipping point is usually around 200+ tests across 2+ CI shards with 10+ runs daily. Below that, overhead isn't painful. Above that, it impacts budgets and developer productivity.

Playwright real-time reporting changes what your CI can do

Streaming isn't just faster. It enables features that post-execution upload can't support, because with upload you only get data after the run ends.

Live abort on failure threshold. If 30% of your tests fail within the first 2 minutes, that usually signals an environment problem: maybe staging is down, or a deployment failed. Running the remaining 400 tests just wastes 15 minutes of CI time.

With real-time reporting, failures are visible as they occur, so the reporting side can detect the spike and signal CI to stop early. That saves CI minutes for runs that matter. Post-execution upload can't do this because the failure rate is unknown until the end.
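The detection half of this can be sketched as a failure-rate check fed by streamed results. The 30% and 2-minute thresholds come from the example above; `FailureRateMonitor` is a hypothetical name, and `minSample` is an assumed guard so a single early failure can't trip it.

```typescript
// Failure-rate circuit breaker fed by streamed test results.
class FailureRateMonitor {
  private completed = 0;
  private failed = 0;

  constructor(
    private readonly maxFailureRate = 0.3,       // abort at >= 30% failing
    private readonly windowMs = 2 * 60 * 1000,   // only judge the first 2 minutes
    private readonly minSample = 10,             // need this many results first
    private readonly startedAt = Date.now(),
  ) {}

  // Call once per streamed result.
  record(status: string): void {
    this.completed += 1;
    if (status === 'failed' || status === 'timedOut') this.failed += 1;
  }

  // True when, early in the run, enough results are in and too many failed.
  shouldAbort(now = Date.now()): boolean {
    if (now - this.startedAt > this.windowMs) return false;
    if (this.completed < this.minSample) return false;
    return this.failed / this.completed >= this.maxFailureRate;
  }
}
```

Acting on the signal is left open here: a reporting platform might cancel the job through the CI provider's API. For a simpler, count-based stop inside Playwright itself, the built-in maxFailures config option (or --max-failures CLI flag) ends the run after a set number of failures.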

Real-time rerun decisions. Identify failed tests mid-run and queue reruns without waiting for the full suite. Instead of "run all, wait 20 minutes, rerun failed, wait again," you get "stream results, detect failures immediately, rerun in parallel."

Some teams combine this with Playwright's --last-failed flag to rerun only broken tests, halving retry cycle time.
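On the rerun side, failures seen mid-run can be turned into a targeted command. This sketch relies on Playwright's CLI accepting `file:line` filters (for example `npx playwright test tests/login.spec.ts:42`); `FailedTest` and `buildRerunCommand` are hypothetical names, and file paths are assumed to be relative to the project root and free of spaces (quote them otherwise).

```typescript
// Location of a test that failed during the current run.
interface FailedTest {
  file: string; // assumed relative to the project root
  line: number;
}

// Build one rerun command targeting each failed test once, or null if none failed.
function buildRerunCommand(failures: FailedTest[]): string | null {
  const targets: string[] = [];
  for (const f of failures) {
    const target = `${f.file}:${f.line}`;
    if (targets.indexOf(target) === -1) targets.push(target); // dedupe retries
  }
  if (targets.length === 0) return null;
  return ['npx', 'playwright', 'test', ...targets].join(' ');
}
```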

Accurate CI cost attribution per test. Streaming sends timestamps with each result, providing exact per-test durations without post-processing. This surfaces insights like "this spec file costs $46/month in CI time," which helps managers prioritize optimizations.

Without streaming, you get only total run duration per shard, which is useful but too coarse for targeted CI cost optimization.
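Once each result carries a duration, attribution is a group-by. The sketch below sums streamed durations per spec file and prices them; the $0.006 per-minute default and the runs-per-month input are assumptions, and `monthlyCostBySpec` is a hypothetical helper. With several workers per runner, summed test time exceeds billed wall-clock time, so treat the output as relative weight per spec rather than an exact bill.

```typescript
// One streamed result: which spec file it came from and how long it took.
interface TimedResult {
  file: string;
  durationMs: number;
}

// Estimated monthly CI cost per spec file, rounded to cents.
function monthlyCostBySpec(
  results: TimedResult[],
  runsPerMonth: number,
  ratePerMinute = 0.006, // assumed runner price
): Map<string, number> {
  // Sum durations per spec file for a single run.
  const msByFile = new Map<string, number>();
  for (const r of results) {
    msByFile.set(r.file, (msByFile.get(r.file) ?? 0) + r.durationMs);
  }
  // Scale one run's minutes up to a month and price them.
  const costs = new Map<string, number>();
  msByFile.forEach((ms, file) => {
    const cost = (ms / 60000) * runsPerMonth * ratePerMinute;
    costs.set(file, Math.round(cost * 100) / 100);
  });
  return costs;
}
```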

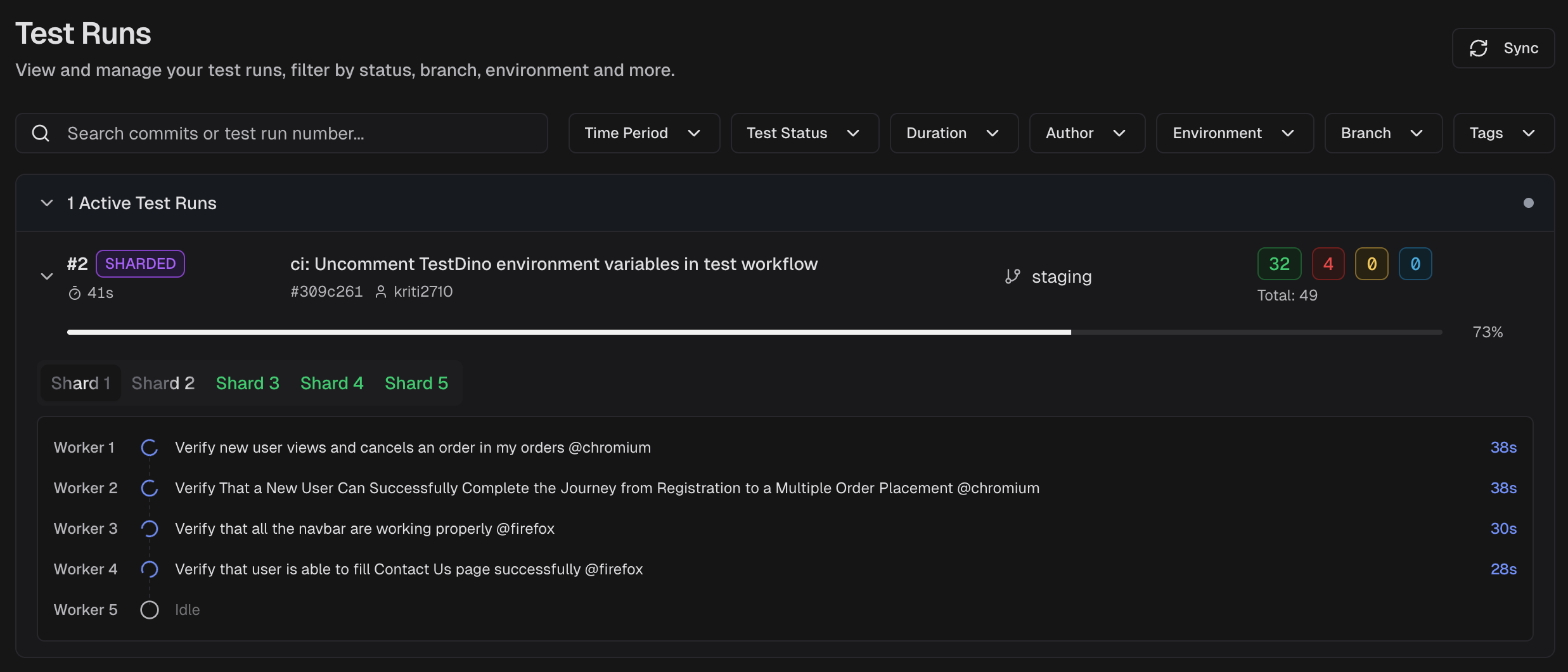

Live dashboards during releases. Engineering managers and release leads can watch test progress on a Playwright dashboard during deployments. No more posting "is CI done yet?" in Slack. No more refreshing the GitHub Actions UI. Results appear in real time, shard by shard, test by test.

This visibility matters during high-pressure release windows when the whole team is waiting on a green signal.

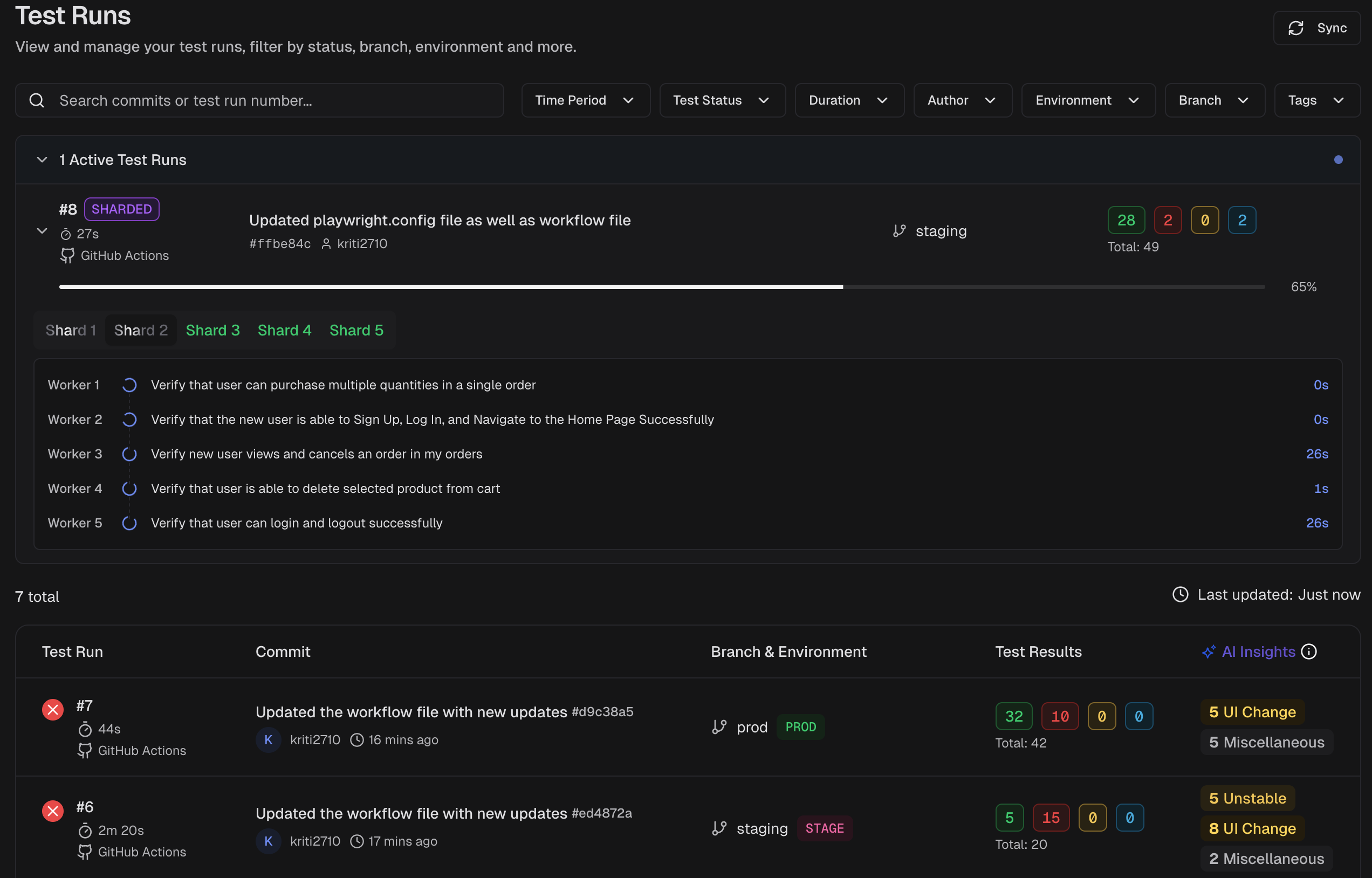

How TestDino handles Playwright real-time reporting

TestDino's Playwright reporter streams results as each test completes during CI execution. The reporter sends test metadata (status, duration, error, stack trace) over HTTP the moment a test finishes. There's no bulk upload step at the end. Your CI runners free up immediately after the last test.

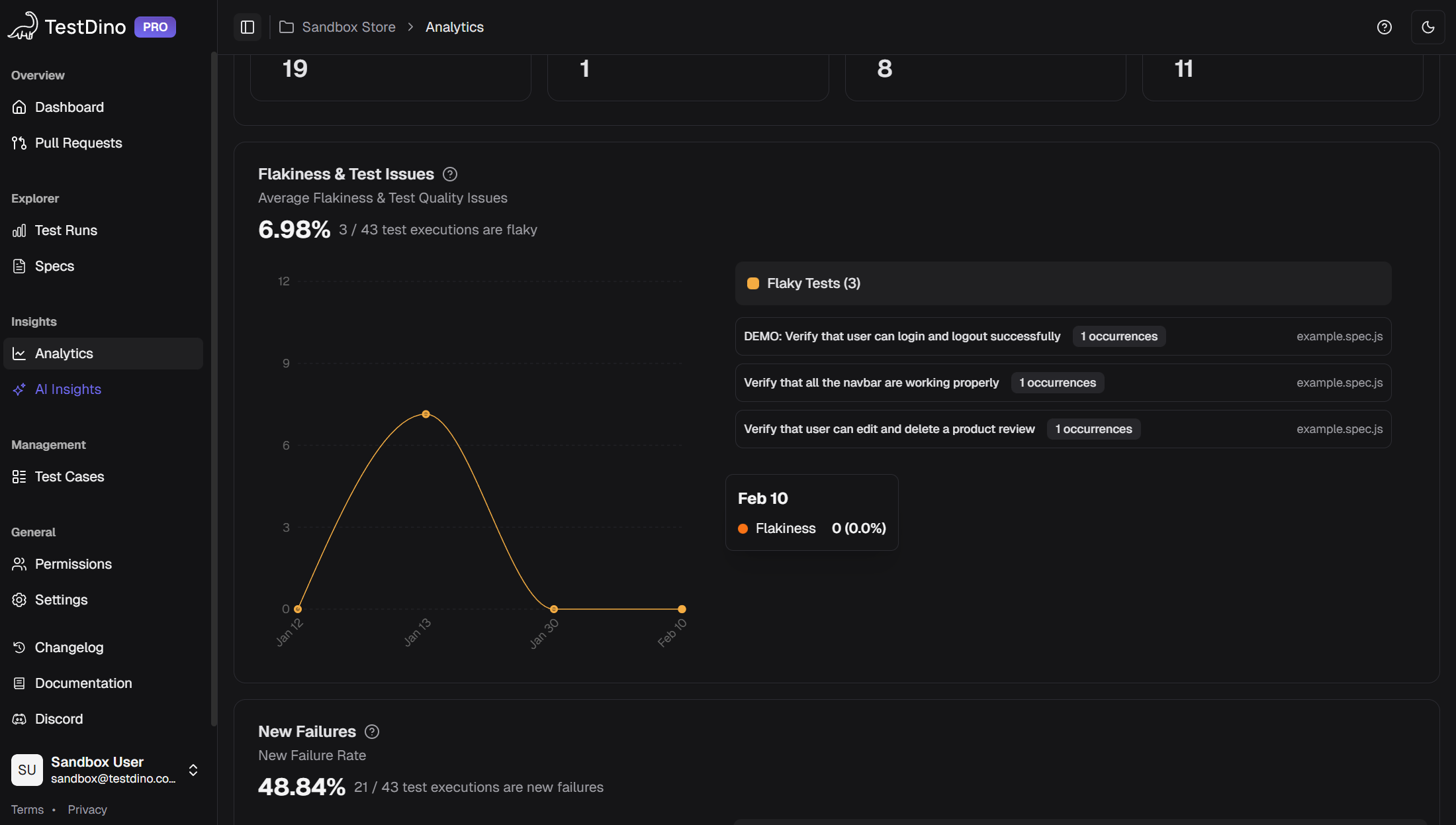

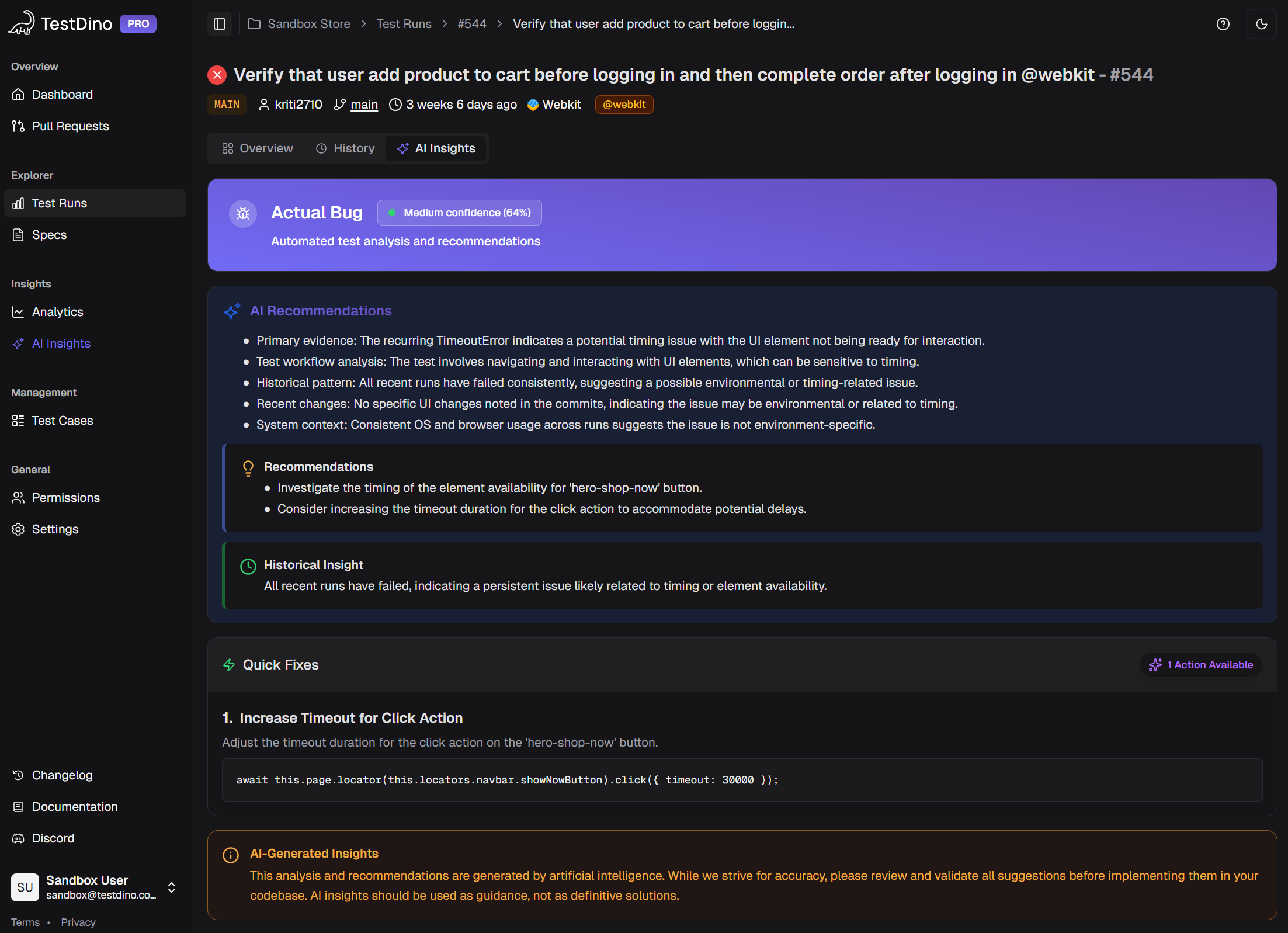

Results appear on the TestDino dashboard in real time. As results stream in, AI failure classification runs on each result, categorizing failures into bug, flaky, infrastructure, or UI change buckets with confidence scores. You don't wait for the full suite to get this analysis. It happens per test, as data arrives.

If CI crashes mid-run, every completed test result is already on TestDino. No data loss. No black-hole runs. This is particularly useful for teams running on spot instances or CI environments with aggressive timeouts.

Traces are handled separately. Teams configure async upload for failed tests only, which keeps the fast path clean. Passing tests skip trace upload entirely. For most suites, this reduces artifact volume by 90%+ compared to uploading everything.

GitHub PR comments and Slack notifications update live as results stream in. Developers see failure context and AI classification on their pull request before the full suite finishes. The test analytics data feeds into trend charts and flakiness tracking without any extra pipeline steps.

The setup takes about 5 minutes: add the reporter to your config, set the required environment variables in CI (such as TESTDINO_TOKEN), and remove the old upload-artifact step.

Migration path: moving from upload-based to streaming

Switching doesn't have to be all-or-nothing. Here's a practical migration path.

Step 1: Add the streaming reporter alongside your existing setup.

```typescript
import { defineConfig } from '@playwright/test';

export default defineConfig({
  reporter: [
    ['list'],
    ['html', { open: 'never' }], // Keep this during migration
    ['json', { outputFile: 'test-results/results.json' }], // Keep this too
    ['@testdino/playwright', {
      token: process.env.TESTDINO_TOKEN,
    }],
  ],
  use: {
    trace: 'on-first-retry',
  },
});
```

Run both reporters in parallel for a week. Compare results on your dashboard with what the old flow produces. Build confidence before cutting over.
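During the parallel-run week, one quick check is comparing totals. The sketch below reads the `stats` object that Playwright's JSON reporter writes (`expected`, `unexpected`, `flaky`, `skipped`) and formats it for a side-by-side look at the dashboard's numbers for the same run. `summarizeReport` is a hypothetical helper; with sharding, each shard writes its own file, so compare shard by shard.

```typescript
// Counts Playwright's JSON reporter records under `stats`.
interface JsonReportStats {
  expected: number;   // outcome matched expectations (typically passed)
  unexpected: number; // failed
  flaky: number;      // failed first, then passed on retry
  skipped: number;
}

// Parse a results.json string and produce a one-line summary for comparison.
function summarizeReport(json: string): string {
  const stats = (JSON.parse(json) as { stats: JsonReportStats }).stats;
  const total = stats.expected + stats.unexpected + stats.flaky + stats.skipped;
  return (
    `total=${total} passed=${stats.expected} failed=${stats.unexpected} ` +
    `flaky=${stats.flaky} skipped=${stats.skipped}`
  );
}
```

For example, read test-results/results.json with Node's readFileSync and pass its contents in, then check the counts against the dashboard.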

Step 2: Set environment variables in CI.

```yaml
env:
  TESTDINO_TOKEN: ${{ secrets.TESTDINO_TOKEN }}
```

Step 3: Remove or reduce the artifact upload step.

```yaml
# Before: uploading everything
- name: Upload report artifacts
  uses: actions/upload-artifact@v4
  if: always()
  with:
    name: playwright-report-${{ strategy.job-index }}
    path: |
      playwright-report/
      test-results/
    retention-days: 7

# After: only upload traces for failures (optional backup)
- name: Upload traces (failures only)
  uses: actions/upload-artifact@v4
  if: failure()
  with:
    name: traces-${{ strategy.job-index }}
    path: test-results/
    retention-days: 3
```

Step 4: Configure trace handling. The recommended default is async upload for failed tests only. Passing tests skip traces entirely.

Step 5: Verify results appear in real time. Trigger a CI run and watch results appear on the dashboard as tests complete. If everything looks good, remove the old HTML/JSON reporters from your config.

The whole migration typically takes a day. You can keep the old reporters as backup until you're confident in the streaming setup.

For teams building a scalable Playwright framework, this change fits naturally into the CI optimization layer. It doesn't require changes to your test code, page objects, or fixtures. Just the reporter config and CI workflow.

Conclusion

Post-execution upload made sense when test suites were small and CI was cheap. Those days are over for most Playwright teams. Suites grow to hundreds of tests, CI costs add up across shards, and developers waste time waiting for results they should have seen 15 minutes earlier.

Playwright real-time reporting fixes the bottleneck by separating lightweight test metadata from heavy trace files and sending results the moment each test finishes. Your CI jobs get shorter. Your failures get visible faster. And your data survives even when CI doesn't.

TestDino's streaming reporter gives you Playwright real-time reporting out of the box. Set it up in under 5 minutes and see your next CI run as it happens.

FAQs

Does Playwright support real-time reporting natively?

No. Playwright supports custom reporters, but its built-in reporters write only to the terminal or to local files. Streaming results to a remote platform during execution requires a reporter that sends each result as the test finishes, either a third-party one or a custom reporter you write yourself.

Does real-time reporting slow down test execution?

In practice, no noticeable slowdown. Each test sends a small metadata payload. This is far cheaper than compressing and uploading large trace files after the run.

Do I lose trace files with real-time reporting?

No. Traces are handled separately. Most teams upload traces only for failed tests or fetch them on demand, which reduces CI load without losing debug data.

What happens if CI crashes mid-run?

With post-execution upload, you lose all results. With streaming, every completed test is already stored, so partial results are preserved.

Does real-time reporting help small test suites?

Not much. If your suite runs under five minutes with few artifacts, the upload overhead is minimal, and streaming adds little value.

Can I run streaming and traditional reporters together during migration?

Yes. You can run streaming and HTML or JSON reporters together, compare outputs, and remove uploads only after you are confident.
