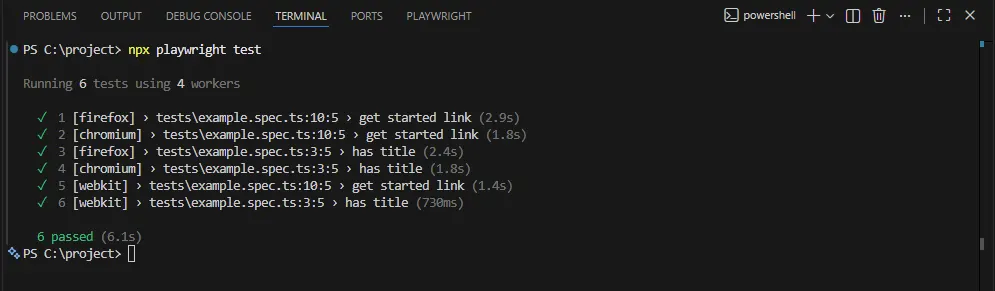

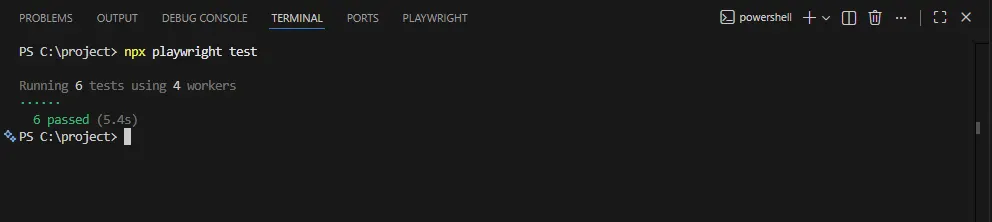

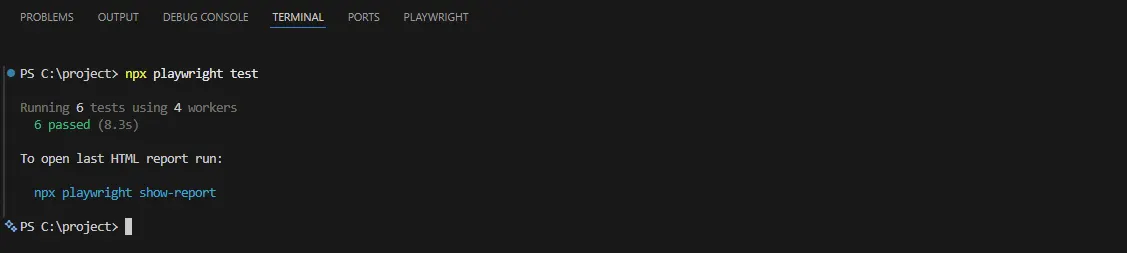

Software test reporting is the difference between knowing a test failed and understanding why it failed.

In modern engineering teams, end-to-end (E2E) test suites can execute thousands of assertions per run, across multiple browsers, environments, feature flags, and CI pipelines.

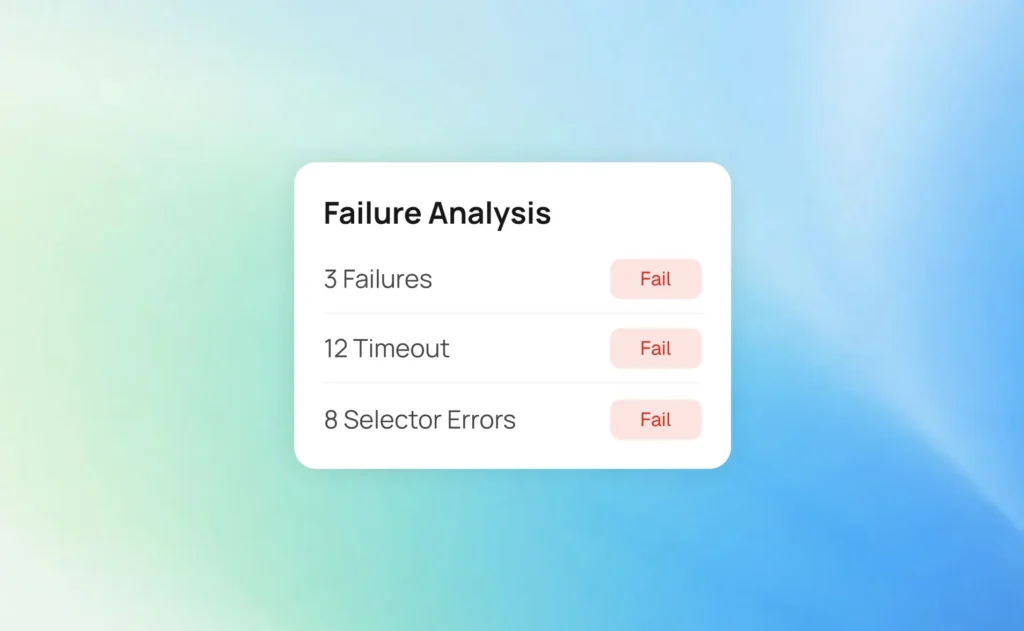

At scale, failures are inevitable. Without strong reporting, diagnosing them turns into guesswork, root-cause analysis slows down, and releases become unreliable.

Commonly cited estimates suggest that developers spend 20-30% of their CI debugging time just figuring out what went wrong rather than fixing it.

Poor reporting increases mean time to resolution (MTTR), delays deployments, and erodes trust in test automation.

This guide explains Playwright's reporting capabilities, from first principles through to the production-grade, CI-optimized setups used by high-performing engineering teams.