Playwright is built to be fast, but as test suites grow, many teams find their Playwright runs slowing down. The cause is usually not the framework itself but how the tests are structured and executed.

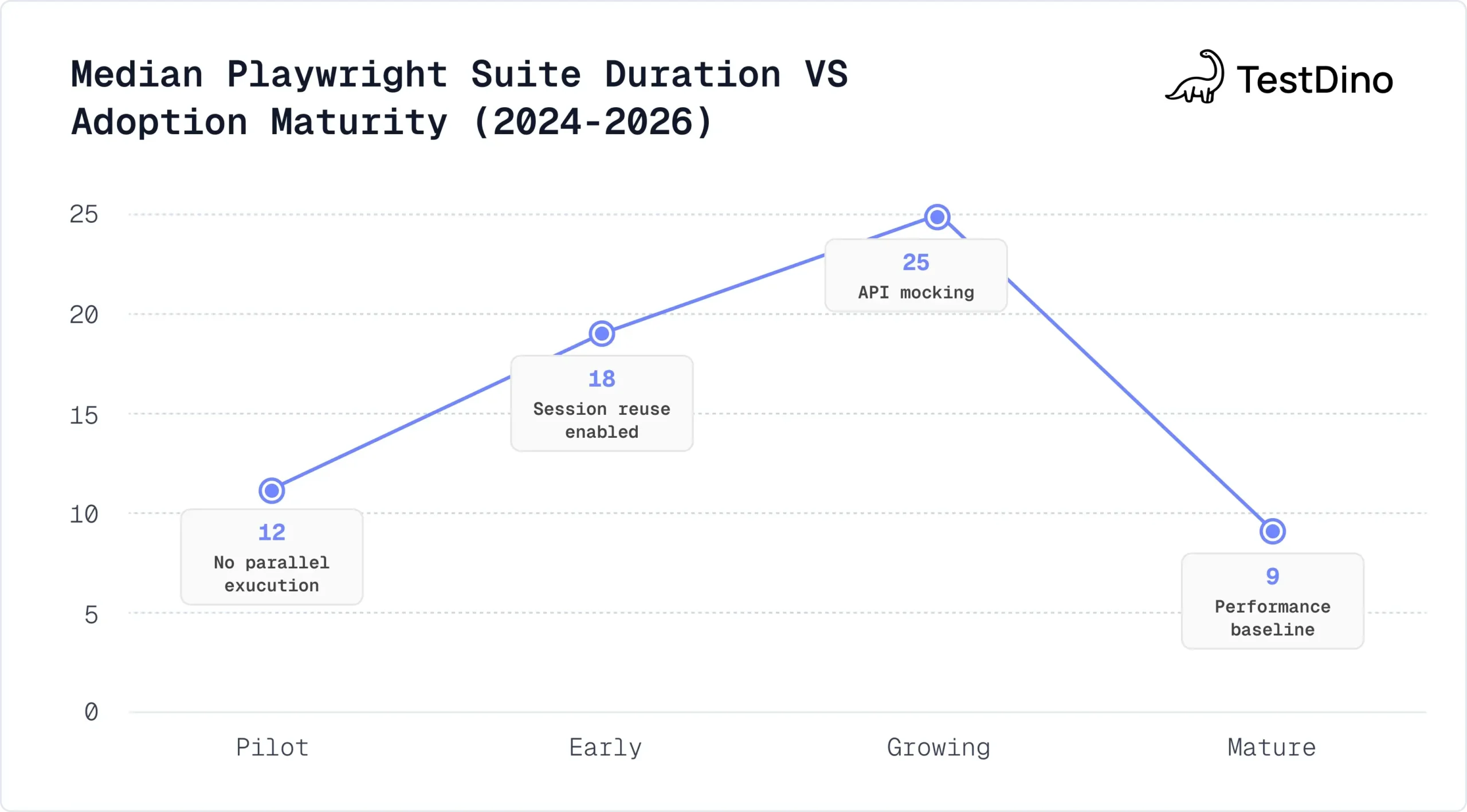

This post brings together real data, practical code examples, and steps you can apply right away. Most slow suites come down to a small set of issues, and fixes such as session reuse and proper parallel execution deliver the biggest gains.
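As a rough illustration of the two fixes named above, here is a minimal `playwright.config.ts` sketch. It assumes a setup file named `auth.setup.ts` that logs in once and saves the session with `page.context().storageState({ path })`. The file name, the `playwright/.auth/user.json` path, and the CI worker count of 4 are placeholder assumptions, not values taken from the measurements in this post.

```typescript
// playwright.config.ts (sketch; paths, project names and worker count are hypothetical)
import { defineConfig, devices } from '@playwright/test';

// Hypothetical location where the setup project saves the logged-in session.
const authFile = 'playwright/.auth/user.json';

export default defineConfig({
  testDir: './tests',
  // Run tests inside each file in parallel, not only separate files across workers.
  fullyParallel: true,
  // Fixed worker count on CI; locally Playwright chooses a default based on CPU cores.
  workers: process.env.CI ? 4 : undefined,
  projects: [
    // Runs before dependent projects: logs in once and writes storage state to authFile.
    { name: 'setup', testMatch: /auth\.setup\.ts/ },
    {
      name: 'chromium',
      use: {
        ...devices['Desktop Chrome'],
        // Each test starts with the saved cookies and localStorage instead of logging in again.
        storageState: authFile,
      },
      dependencies: ['setup'],
    },
  ],
});
```

The idea is to pay the login cost once per run rather than once per test, and let `fullyParallel` spread the remaining work across workers. The right CI worker count depends on the cores your runners actually have.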

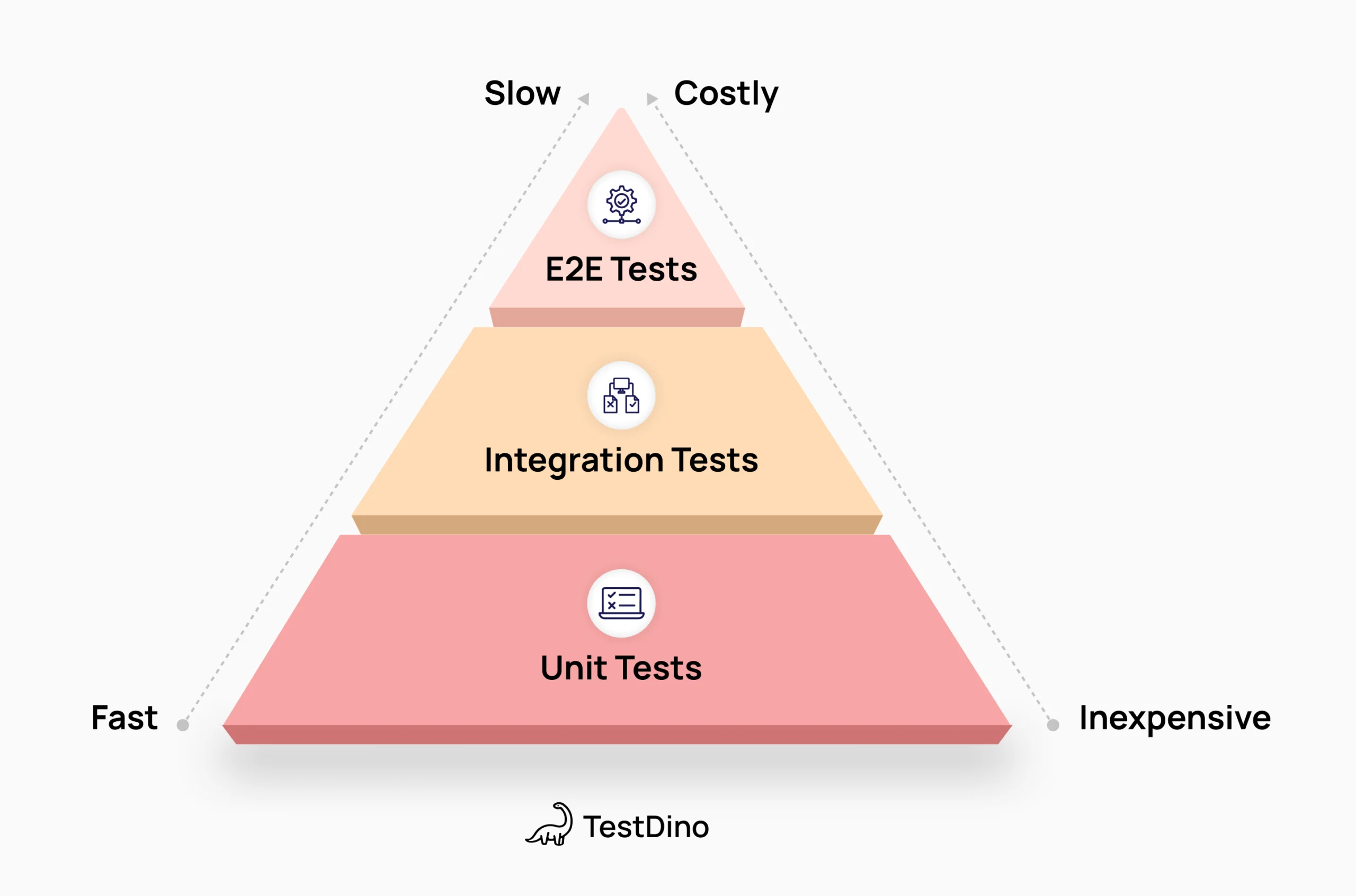

With the right focus, teams can keep E2E runs under 2–3 minutes, track performance with clear metrics, and cut total execution time by 50–70%.