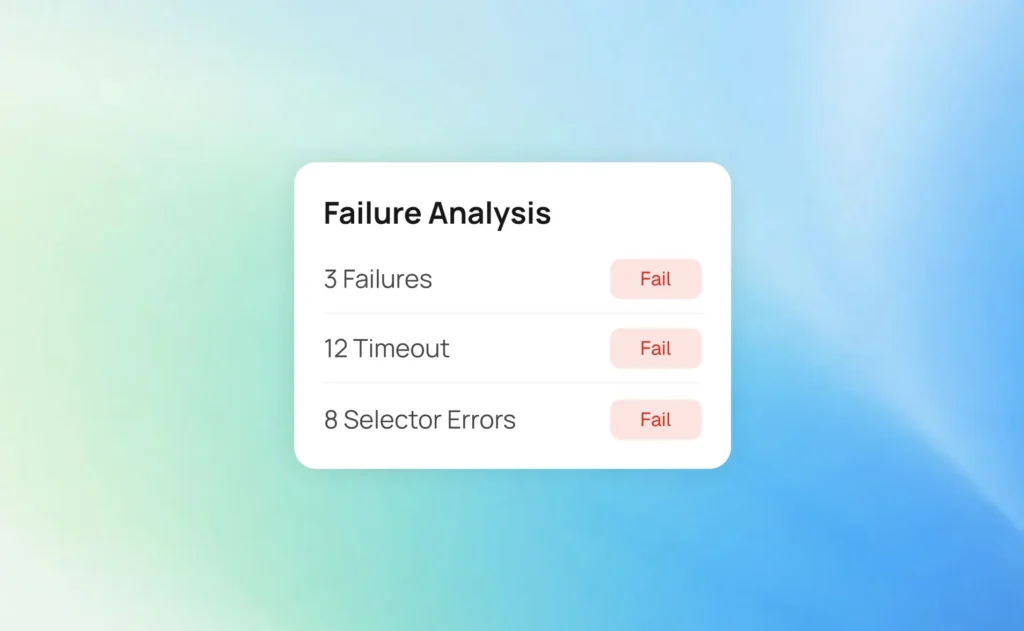

| Category | Meaning | Action Required |
|---|---|---|
| Bug | Consistent failure = product defect | Fix immediately |
| UI Change | Selector broke due to DOM changes | Update locators |
| Flaky | Passes on retry = unstable test | Stabilize or quarantine |
| Environment | CI setup or infrastructure issue | DevOps investigation |

The Playwright reporting gap: why test reports don’t scale

Most teams run Playwright tests but lack real visibility into failures. The reporting gap hides root causes and slows debugging. Here’s how to close that gap quickly and get actionable insights fast.

Pratik Patel

Dec 10, 2025

Playwright failures in CI can take excessive time to interpret.

Logs are long, traces require digging, and reruns produce inconsistent outcomes, making it difficult to confirm whether the issue is real or flaky.

The result is time spent diagnosing instead of resolving.

This is not a limitation of Playwright itself, but of test reporting and observability. A Playwright test report is a detailed document generated after running a set of automated tests using the Playwright testing framework.

Slack’s engineering team measured over 553 hours per quarter triaging failures, equivalent to more than one full-time engineer focused solely on interpreting incomplete signals.

The core Playwright reporting gap is simple: teams collect raw data, but lack actionable insight.

This slows deployment, increases rework, and hides the root cause behind unclear logs.

This blog focuses on:

- Why default Playwright reporting consumes excessive time

- The three analysis layers that are commonly overlooked

- How to apply AI reporting to Playwright without changing your test stack

Why Default Playwright Test Reporting Slows You Down

Playwright is incredibly powerful, with features like tracing, auto-waiting, and parallel execution. But when it comes to reporting, the default setup often slows you down. It doesn’t give you deeper insights, historical patterns, or meaningful context about why tests fail.

That’s exactly where a test reporting platform like TestDino makes a difference. TestDino simplifies Playwright reporting by giving you clear dashboards, smarter insights, and workflows that help you understand failures faster. Instead of digging through logs, you get clean, structured analysis that’s easy to act on.

And now, TestDino goes even further with its own TestDino MCP (Model Context Protocol) server. This lets you interact with your Playwright test results directly through AI assistants like Claude and Cursor. You can analyze failures, review trends, upload local results, or get debugging insights all through simple natural-language prompts. No switching tools, no manual digging, just fast, AI-powered reporting.

However, speed in test execution is not the same as speed in development.

The Hidden Cost of "Simple" Test Failures

When a test fails, three things happen:

1. Context switching (15 minutes)

The developer stops current work to investigate.

2. Manual categorization (10-15 minutes)

Is this a bug? Flake? UI change? Environmental issue? Review the specific test case details, including execution traces and attachments, to troubleshoot the failure.

3. Documentation (5-10 minutes)

Create a Jira ticket, update the PR, and notify the team.

Total: 30-40 minutes per failure.

For a team with 50 test failures per week at $100/hour:

- Weekly cost: $2,500 in lost productivity

- Annual cost: $130,000 just for triage

After Slack implemented automated flaky test detection, they reduced test job failures from 57% to under 5%, saving 23 days of developer time per quarter.

- Calculate your cost: failures per week × 0.5 hours (30 min per failure) × hourly rate × 52 weeks
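The formula above can be turned into a small calculator. This is a minimal sketch; `triageCost` and its field names are hypothetical, and the inputs simply reuse the article's example figures (50 failures per week, 30 minutes each, $100/hour).

```typescript
// Hypothetical helper: estimates what manual triage costs a team.
interface TriageInputs {
  failuresPerWeek: number;
  minutesPerFailure: number;
  hourlyRate: number; // dollars per engineer-hour
  weeksPerYear?: number; // defaults to 52
}

interface TriageCost {
  weekly: number;
  annual: number;
}

function triageCost(inputs: TriageInputs): TriageCost {
  const { failuresPerWeek, minutesPerFailure, hourlyRate, weeksPerYear = 52 } = inputs;
  // Convert minutes to hours before multiplying by the hourly rate.
  const hoursPerWeek = (failuresPerWeek * minutesPerFailure) / 60;
  const weekly = hoursPerWeek * hourlyRate;
  return { weekly, annual: weekly * weeksPerYear };
}

// The article's example: 50 failures/week, 30 minutes each, $100/hour.
const exampleCost = triageCost({ failuresPerWeek: 50, minutesPerFailure: 30, hourlyRate: 100 });
console.log(exampleCost); // weekly: 2500, annual: 130000
```

Swap in your own failure count and rate to see how quickly triage time adds up.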

The 3 Missing Layers in Standard Playwright Reporting

Most teams rely on Playwright’s default reporters or basic CI output. These show what failed, but they are not fully dependable for explaining why the failure occurred or how it affects the release. To gain actionable insights, teams need reporting that goes deeper than a simple pass–fail list.

Here's what's missing:

1. Automated Failure Classification

Every test failure fits into one of four categories: Bug, UI Change, Flaky, or Environment.

- Without classification: 20-30 minutes per failure to manually determine category

- With AI-powered classification: Instant categorization on every run.

Modern Playwright analytics tools like TestDino analyze:

- Error message patterns

- Historical pass/fail rates

- Retry behavior and timing

- Stack traces and DOM state

- Cross-environment performance

As a result, you know why a test failed before you even open the logs.

When a test fails, advanced reporting platforms ingest the trace data and classify it automatically.

Result: Instead of manual detective work, you get instant classification with confidence scores.
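To make the idea concrete, here is a deliberately simple rule-based sketch of failure classification. It is not how TestDino or any other platform classifies failures; the `FailureSignal` shape, the regex patterns, and the confidence values are all illustrative assumptions.

```typescript
type Category = "Bug" | "UI Change" | "Flaky" | "Environment";

// Hypothetical input: signals a reporting tool might extract from one failure.
interface FailureSignal {
  errorMessage: string;
  passedOnRetry: boolean;
  recentFailureRate: number; // 0..1, share of recent runs where this test failed
}

interface Classification {
  category: Category;
  confidence: number; // 0..1, illustrative values only
}

function classifyFailure(signal: FailureSignal): Classification {
  const message = signal.errorMessage.toLowerCase();

  // A test that passes on retry is unstable by definition.
  if (signal.passedOnRetry) {
    return { category: "Flaky", confidence: 0.8 };
  }
  // Network-level errors usually point at CI or infrastructure.
  if (/econnrefused|enotfound|net::err_/.test(message)) {
    return { category: "Environment", confidence: 0.7 };
  }
  // Locator and selector errors suggest the DOM changed under the test.
  if (/locator|selector|strict mode violation/.test(message)) {
    return { category: "UI Change", confidence: 0.6 };
  }
  // Otherwise treat it as a product defect; consistent failures raise confidence.
  return { category: "Bug", confidence: signal.recentFailureRate >= 0.9 ? 0.7 : 0.4 };
}

console.log(
  classifyFailure({
    errorMessage: "Timeout 30000ms exceeded waiting for locator('#submit')",
    passedOnRetry: false,
    recentFailureRate: 1,
  }),
);
```

A real classifier would weigh many more signals (history, DOM state, timing), but the ordering of the checks shows why retry behavior is usually evaluated first.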

2. Cross-Run Analytics (The Pattern Detector)

One failure gives you a clue. Multiple failures across branches give you a pattern.

TestDino collects run history across CI and highlights which tests are flaky, how often they fail, and under what conditions. The JSON reporter outputs test results in a machine-readable format, making it ideal for data analysis and integration with dashboards.

Key signals teams should look for:

- Failure clusters: When the same tests fail together, the root cause is usually shared.

- Branch differences: Passing on feature branches but failing on main often points to merge or dependency issues.

- Time patterns: Failures at specific times hint at infrastructure or scheduled jobs.

- Environment correlation: Passing on dev but failing on staging often means configuration problems.

More than 70% of flaky tests behave inconsistently right from the start. When analytics catch these patterns early, they are far less likely to escalate into production issues.

Manual cross-run comparison is unrealistic. Automated analytics surface these insights in seconds.
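As a rough illustration of environment and branch correlation, the sketch below groups run history by test and computes a failure rate per environment or branch. The `RunRecord` shape is a hypothetical, simplified record, not the schema of Playwright's JSON report; in practice you would map each report entry into something like it first.

```typescript
// Hypothetical, simplified record of one test execution in one CI run.
interface RunRecord {
  testId: string;
  environment: string; // e.g. "dev", "staging"
  branch: string;
  passed: boolean;
}

// testId -> dimension value -> failure rate (0..1)
type RateTable = Record<string, Record<string, number>>;

function failureRatesBy(records: RunRecord[], dimension: "environment" | "branch"): RateTable {
  const counts: Record<string, Record<string, { failed: number; total: number }>> = {};
  for (const record of records) {
    const perTest = (counts[record.testId] = counts[record.testId] || {});
    const value = record[dimension];
    const bucket = (perTest[value] = perTest[value] || { failed: 0, total: 0 });
    bucket.total += 1;
    if (!record.passed) bucket.failed += 1;
  }

  const rates: RateTable = {};
  for (const testId of Object.keys(counts)) {
    rates[testId] = {};
    for (const value of Object.keys(counts[testId])) {
      const bucket = counts[testId][value];
      rates[testId][value] = bucket.failed / bucket.total;
    }
  }
  return rates;
}

const history: RunRecord[] = [
  { testId: "checkout", environment: "dev", branch: "main", passed: true },
  { testId: "checkout", environment: "dev", branch: "main", passed: true },
  { testId: "checkout", environment: "staging", branch: "main", passed: false },
  { testId: "checkout", environment: "staging", branch: "main", passed: false },
];
const byEnvironment = failureRatesBy(history, "environment");
console.log(byEnvironment); // checkout: dev 0, staging 1
```

A test that never fails on dev but always fails on staging, as in this sample data, is the configuration-problem pattern described above.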

3. Role-Specific Dashboards

Your QA lead, developers, and engineering managers need completely different views of the same test data.

Playwright Dashboards by Role

For QA Leads:

- Overall suite health and pass rate trends

- Top 10 flakiest tests ranked by impact

- Failure category breakdown (bugs vs. flakes vs. UI)

- Environment comparison (dev → staging → prod)

QA teams play a crucial role in analyzing test results and improving testing processes within automated frameworks like Playwright.

For Developers:

- Only their PR’s test results

- Blocking failures that prevent merge

- Known flaky tests to safely ignore

- Direct links to traces and error logs

For Engineering Managers:

- Team velocity impact (hours lost to flakes)

- Test coverage gaps by feature area

- ROI of test automation investments

- Sprint-over-sprint quality trends

Showing a developer the whole test suite when they only need a clear “can I merge this?” adds too much unnecessary noise. Test reports should adapt to the needs of your team, project scale, and the level of detail required.

How to Implement Advanced Playwright Test Reporting

Playwright test reporting offers flexibility, allowing teams to generate custom reports in various formats and easily integrate external tools for enhanced reporting.

By leveraging third-party reporters, teams can add advanced features like detailed HTML reports, real-time monitoring, and interactive dashboards to further improve the reporting process.

Step-by-Step Implementation

Step 1: Configure Playwright for Maximum Data Collection
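A minimal `playwright.config.ts` sketch for this step might look like the following. The retry count and output paths are assumptions to adapt to your project; the reporter names and the `trace`, `screenshot`, and `video` options are standard Playwright settings.

```typescript
// playwright.config.ts
import { defineConfig } from '@playwright/test';

export default defineConfig({
  // Retries let Playwright mark a test as "flaky" when it passes on a later attempt.
  retries: process.env.CI ? 2 : 0,

  // Combine a console reporter with machine-readable outputs for later analysis.
  reporter: [
    ['list'],
    ['html', { open: 'never' }],
    ['json', { outputFile: 'test-results/results.json' }],
    ['junit', { outputFile: 'test-results/junit.xml' }],
  ],

  use: {
    // Capture a trace only when a test is retried, which keeps overhead low.
    trace: 'on-first-retry',
    // Keep screenshots and videos only for failing tests.
    screenshot: 'only-on-failure',
    video: 'retain-on-failure',
  },
});
```

Collecting artifacts only on failure or retry is a deliberate trade-off: passing runs stay fast, while failing runs still carry enough evidence to diagnose.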

Step 2: Upload Artifacts in Your CI Pipeline

Step 3: Connect to a Reporting Platform

Modern platforms offer:

- One-line integration: Add a reporter or SDK, or create custom reporters in Playwright to tailor test reporting according to your specific needs

- Automatic AI classification: Analyze failures instantly

- Historical tracking: Cross-run analytics built-in

- Custom dashboards: Role-specific views

Test reporting tools in 2025 use execution histories to automatically detect anomalies, map failures to root causes, and identify environmental issues that humans miss.

Step 4: Integrate with Bug Tracking

Create a seamless feedback loop between your CI, AI failure analysis, and bug tracking system (e.g., Jira):

Workflow

1. Test fails in CI

2. AI classifies the failure

3. If classified as “Bug” → Automatically create a Jira ticket containing:

- Test details: name, suite, and file location

- Failure insights: category + confidence score

- Error context: message and stack trace

- Artifacts: screenshots and trace links

- Version info: Git commit and CI job reference

- History: previous occurrences or related issues

- Test result: outcome and summary from the Playwright report

- Description: Include a clear description of the test case and failure context in the bug report to help with faster triage and resolution.

4. Assign ticket to the relevant code owner

5. Developer receives full diagnostic context instantly
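The workflow above can be sketched as a function that turns a classified failure into a ticket draft. Everything here is hypothetical: the `ClassifiedFailure` and `BugTicketDraft` shapes, the labels, and the example URLs are illustrative, and the draft is intentionally tracker-agnostic rather than a real Jira API payload.

```typescript
type Category = "Bug" | "UI Change" | "Flaky" | "Environment";

// Hypothetical shape of a failure after classification; field names are illustrative.
interface ClassifiedFailure {
  testName: string;
  suite: string;
  file: string;
  category: Category;
  confidence: number; // 0..1
  errorMessage: string;
  traceUrl: string;
  commitSha: string;
  ciJobUrl: string;
}

// Tracker-agnostic draft; mapping it onto a real bug tracker request is left out on purpose.
interface BugTicketDraft {
  summary: string;
  description: string;
  labels: string[];
}

function draftBugTicket(failure: ClassifiedFailure): BugTicketDraft | null {
  // Only failures classified as product bugs become tickets (step 3 of the workflow).
  if (failure.category !== "Bug") {
    return null;
  }
  const description = [
    `Test: ${failure.suite} > ${failure.testName} (${failure.file})`,
    `Category: ${failure.category} (confidence ${Math.round(failure.confidence * 100)}%)`,
    `Error: ${failure.errorMessage}`,
    `Trace: ${failure.traceUrl}`,
    `Commit: ${failure.commitSha}`,
    `CI job: ${failure.ciJobUrl}`,
  ].join("\n");
  return {
    summary: `[Playwright] ${failure.testName} failed`,
    description,
    labels: ["playwright", "auto-triage"],
  };
}

const sampleFailure: ClassifiedFailure = {
  testName: "checkout total is correct",
  suite: "Checkout",
  file: "tests/checkout.spec.ts",
  category: "Bug",
  confidence: 0.9,
  errorMessage: "expected 42.00 but received 40.00",
  traceUrl: "https://ci.example.com/artifacts/trace.zip",
  commitSha: "abc1234",
  ciJobUrl: "https://ci.example.com/jobs/1",
};
const draft = draftBugTicket(sampleFailure);
console.log(draft ? draft.summary : "no ticket needed");
```

Returning `null` for non-bug categories keeps flaky and environment failures out of the bug tracker, so they can be routed elsewhere.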

Impact

Time saved: manual ticket creation (≈15 minutes) → automated review (≈30 seconds)

Real Data: What Teams Actually Gain

Let’s talk numbers. Teams that implement comprehensive Playwright test reporting report improvements in key metrics like test flakiness, debugging speed, and overall test health.

Effective test reporting ensures that test results are clear and actionable, leading to meaningful insights.

Time Savings

| Metric | Before | After | Improvement |
|---|---|---|---|
| Triage time per failure | 30 min | 6 min | 80% reduction |
| False escalations | 10/week | 1/week | 90% drop |
| Bug report creation | 15 min | 2 min | 87% faster |

Teams can also download detailed Playwright test reporting data, such as XML JUnit report files, for further analysis and record-keeping.

Quality Improvements

- Flaky test detection: Catch 70% on first run (before merging)

- Real bugs found: 40% increase (reduced noise = better focus)

- Developer confidence: Teams trust CI results again

Business Impact

- PR merge time: 50% faster (less investigation back-and-forth, with some test results reported inline for immediate visibility)

- Release velocity: 2-3x more frequent deploys possible

- On-call burden: Reduced by catching issues pre-production

Slack saved 553 hours per quarter through automated flaky test detection, equivalent to 23 days of engineering time.

- ROI calculation: If triage costs $130K/year and a reporting platform costs $2K/month ($24K/year), you save $106K annually, roughly a 440% ROI ($106K ÷ $24K).

Advanced Strategy: Detecting Playwright Flaky Tests Early

Flaky tests are the #1 reason developers stop trusting automation. They pass sometimes and fail other times without any code changes.

When using Playwright test reporting, many teams use the list reporter to display test results directly in the console, making it easier to spot inconsistencies.

The List Reporter outputs test results in a human-readable list format, showing the names of the tests along with their status, which is especially useful for debugging and CI pipelines.

Why Flakes Happen

Common culprits:

- Timing issues: Not waiting for async operations

- Shared state: Tests interfere with each other

- External dependencies: API calls, third-party services

- Non-deterministic data: Random values, timestamps

- Environment variability: Network speed, resource contention

The Modern Flaky Test Strategy

Don't just fix flakes. Prevent them from reaching production.

- Detect on first run: Configure automatic retries to catch flakiness before merging

- Quarantine known flakes: Let them run but don't block PRs

- Track flakiness rates: Which tests fail 10% vs. 50% of the time?

- Prioritize by impact: Fix high-traffic flakes first

Specialized Playwright analytics platforms use historical data to calculate 'flakiness scores' and automatically surface problematic tests.
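One simple way to think about a flakiness score is the share of runs in which a test passed only after a retry, weighted by how often the test runs. The sketch below assumes that definition; the `TestHistory` shape, the `runsPerWeek` weighting, and the function names are hypothetical, and real platforms may score flakiness differently.

```typescript
// "flaky" mirrors Playwright's label for a test that failed first and passed on retry.
type Outcome = "passed" | "failed" | "flaky";

// Hypothetical per-test history collected across CI runs.
interface TestHistory {
  testId: string;
  outcomes: Outcome[];
  runsPerWeek: number; // how often the test runs, used as an impact weight
}

// Share of recorded runs that were flaky (0..1).
function flakinessScore(outcomes: Outcome[]): number {
  if (outcomes.length === 0) return 0;
  const flakyRuns = outcomes.filter((outcome) => outcome === "flaky").length;
  return flakyRuns / outcomes.length;
}

// Expected number of flaky runs per week; higher means fix sooner.
function weeklyImpact(history: TestHistory): number {
  return flakinessScore(history.outcomes) * history.runsPerWeek;
}

// Sort a copy so the caller's array is left untouched.
function rankFlakyTests(histories: TestHistory[]): TestHistory[] {
  return histories.slice().sort((a, b) => weeklyImpact(b) - weeklyImpact(a));
}

const ranked = rankFlakyTests([
  { testId: "search", outcomes: ["flaky", "passed", "passed", "passed"], runsPerWeek: 40 },
  { testId: "login", outcomes: ["passed", "flaky", "passed", "flaky"], runsPerWeek: 40 },
]);
console.log(ranked.map((history) => history.testId)); // login first, then search
```

Ranking by weekly impact rather than raw score reflects the "prioritize by impact" point above: a moderately flaky test that runs on every PR can cost more than a very flaky one that runs nightly.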

Smart Integration of CI, Playwright Reporting, and Bug Tracking

TestDino connects directly with CI and your bug tracker to streamline triage. When a test fails, TestDino generates structured bug data automatically, removing manual copying and guesswork.

TestDino pre-fills each issue with:

- Test name, file, and line

- Failure category and confidence score

- Full evidence: error, stack trace, console

- Visual proof: screenshots, video, traces

- History across recent runs

- Direct links to code, commit, and CI job

The result is less context switching and faster fixes.

Conclusion

Playwright reporting should provide clarity, not guesswork.

When you add failure classification, cross-run analytics, and role-based dashboards, debugging becomes faster and more accurate.

CI already outputs the data you need.

TestDino helps turn that data into clear insight so you solve real issues instead of chasing noise.

FAQs

Treating all failures equally. Without classification, you waste time on flakes while real bugs sit unnoticed. Over 60% of failure time is triage, not fixing.
