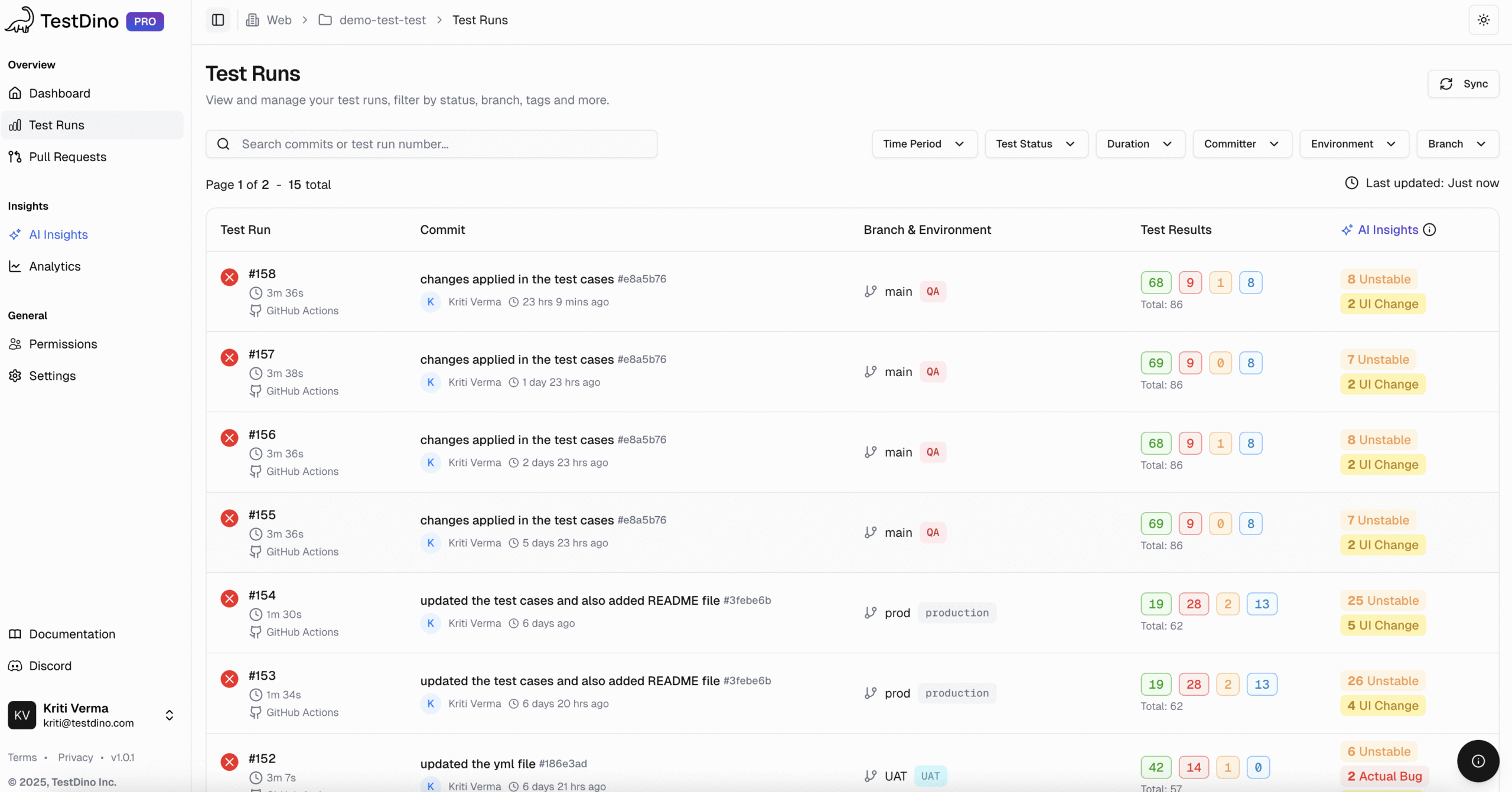

Together, these components form what’s known as an evidence bundle, the essential kit for decision-making.

When QA cites these artifacts during release discussions, the conversation shifts from “I think this is fine” to “Here’s the data that proves it,” with that data coming from data-driven testing. This is the power of structured QA data analytics: clarity, confidence, and control.