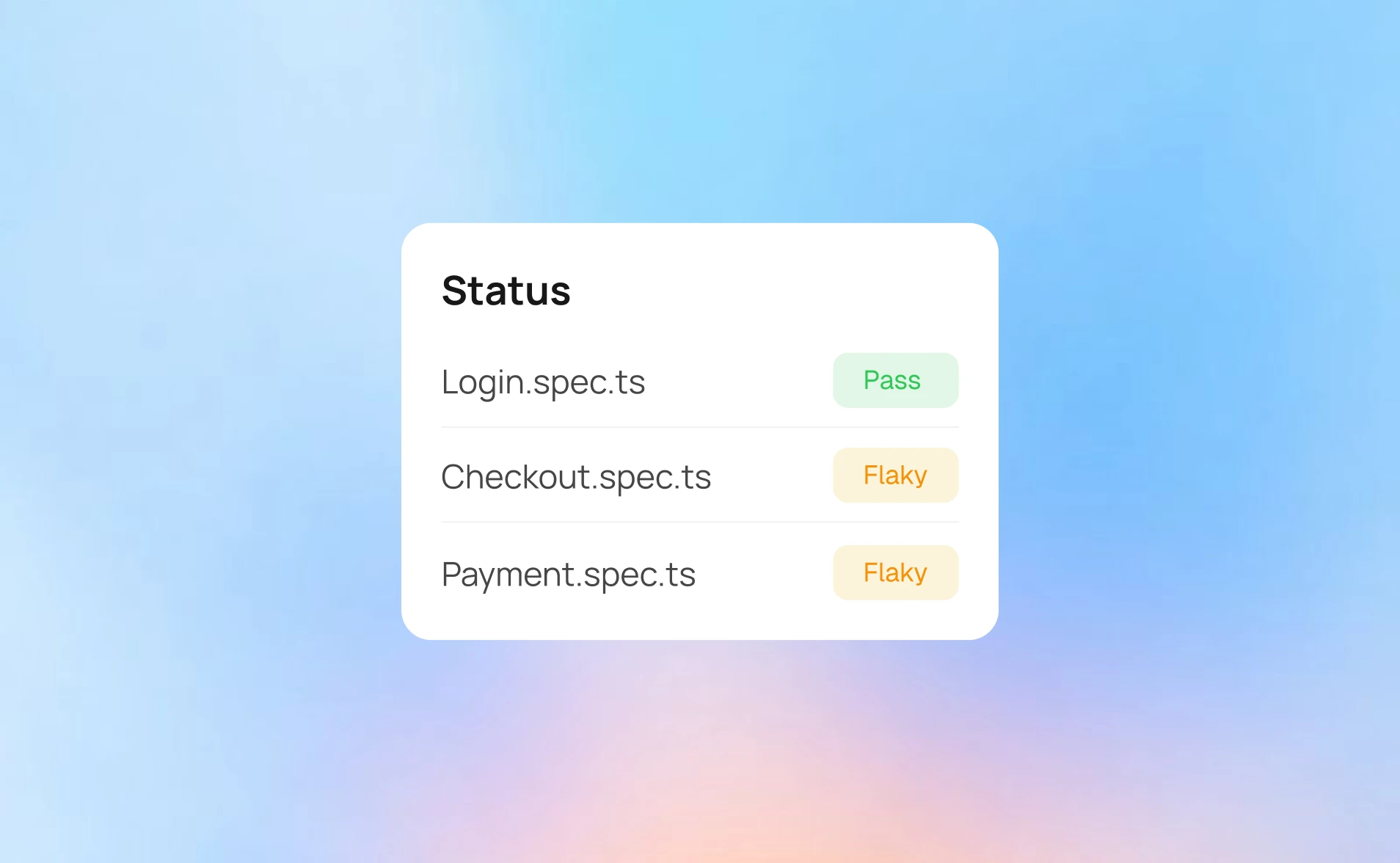

Flaky tests erode trust in your test suite. One minute your CI pipeline fails, the next it passes, and your team loses hours chasing failures that aren't real bugs.

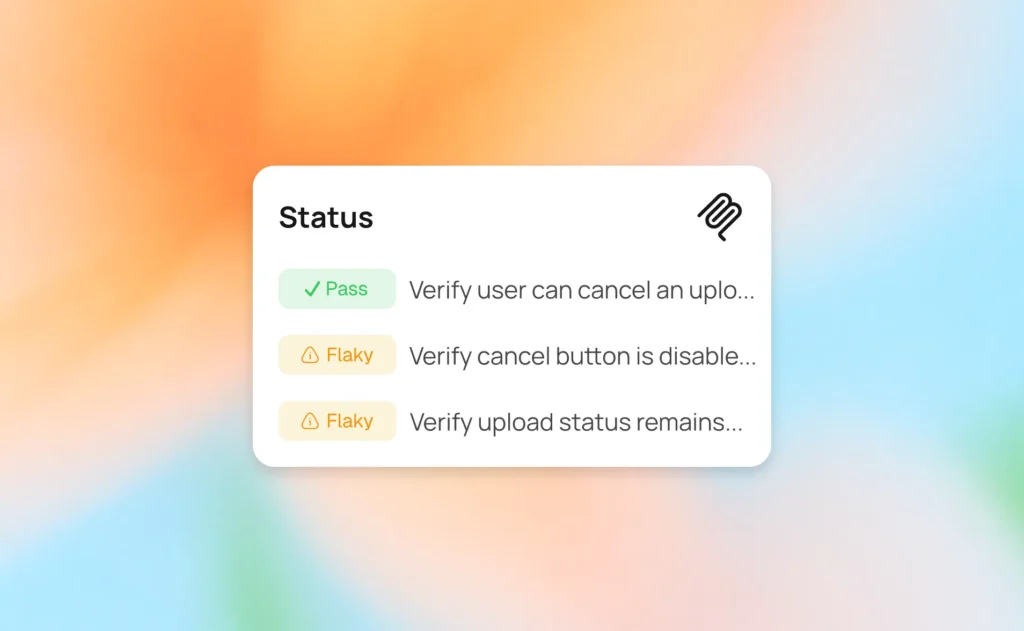

Retries only hide the instability. Real test stability comes from identifying and fixing root causes (timing issues, race conditions, and environment noise) rather than masking them.
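To see why a retry hides a problem instead of fixing it, here is a minimal TypeScript sketch that is not tied to any browser. A simulated "racy" test fails on its first call and passes afterwards. A naive retry loop would report it as green, so this helper keeps every failure message and labels a pass-after-failure as "flaky". That label mirrors how Playwright's reporter classifies a test that fails and then passes on retry. The names `runWithRetries` and `makeRacyTest` are hypothetical and are not part of Playwright's API.

```typescript
// Hypothetical outcome labels, modeled loosely on Playwright's reporter,
// which reports a test that fails and then passes on retry as "flaky".
type Outcome = "passed" | "flaky" | "failed";

interface RunResult {
  outcome: Outcome;
  attempts: number;
  errors: string[]; // messages from every failed attempt, kept for root-cause analysis
}

// Runs `test` up to 1 + maxRetries times and records every failure,
// instead of discarding earlier failures once a later attempt passes.
async function runWithRetries(
  test: () => Promise<void>,
  maxRetries: number,
): Promise<RunResult> {
  const errors: string[] = [];
  for (let attempt = 1; attempt <= maxRetries + 1; attempt++) {
    try {
      await test();
      // A pass that follows at least one failure is flaky, not green.
      return {
        outcome: errors.length === 0 ? "passed" : "flaky",
        attempts: attempt,
        errors,
      };
    } catch (err) {
      errors.push(err instanceof Error ? err.message : String(err));
    }
  }
  return { outcome: "failed", attempts: maxRetries + 1, errors };
}

// Hypothetical stand-in for a race condition: the first
// `failuresBeforePass` calls throw, and every later call succeeds.
function makeRacyTest(failuresBeforePass: number): () => Promise<void> {
  let calls = 0;
  return async () => {
    calls++;
    if (calls <= failuresBeforePass) {
      throw new Error(`element not ready on attempt ${calls}`);
    }
  };
}

// Usage: with two retries allowed, the racy test eventually "passes",
// but the result still flags it as flaky and keeps the first error.
runWithRetries(makeRacyTest(1), 2).then((result) => {
  console.log(result.outcome, result.attempts); // flaky 2
});
```

The design choice worth copying is not the retry itself but the bookkeeping. A retry that discards earlier errors turns a race condition into a silent pass. Recording those errors gives you something concrete to trace back to the timing issue or environment noise that caused it.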

This guide shows how to fix instability at the source with Playwright best practices and AI-powered insights, so your team moves from firefighting to consistent, reliable automation.