Automating Visual Regression Checks with Playwright MCP

Playwright MCP improves visual regression testing by adding DOM and accessibility context to Playwright screenshots. It helps teams reduce false positives and catch real UI issues faster in CI.

Visual regression testing catches UI breaks before users see them.

When styles, components, or layouts shift frequently, automated visual checks become essential.

But traditional screenshot comparison has problems of its own.

Animations trigger false alarms, dynamic content causes flaky tests, and teams spend hours reviewing changes that don't matter.

Playwright MCP adds context-aware validation on top of standard Playwright visual testing.

Instead of trusting pixel comparisons alone, it evaluates what changed and whether that change actually matters.

This guide explains how Playwright MCP improves visual regression workflows in real projects.

What is Visual Regression Testing?

Visual regression testing verifies that the visual appearance of a web application remains consistent after code changes.

It focuses on detecting unintended UI changes that functional tests often miss.

In practice, visual regression testing works by capturing screenshots of pages or components and comparing them against approved baseline images. Any difference beyond a defined threshold is reported as a potential issue.

This approach helps teams catch layout shifts, styling issues, missing elements, and spacing problems early in the development cycle.

It is especially valuable for modern applications where CSS, responsive layouts, and frequent UI updates are common.

Visual regression testing does not replace functional testing.

Instead, it complements it by ensuring that the user interface looks the way it is supposed to after every release.

Why Visual Regression Matters

Visual breakage is often the first thing users notice, even before functional issues, which makes early detection critical for product quality.

- Prevents unnoticed layout breaks caused by CSS, font, or spacing changes

- Protects user experience across releases by catching visual issues before production

- Reduces reliance on manual UI reviews during frequent deployments

- Helps maintain visual consistency across browsers, viewports, and devices

- Detects UI regressions that functional tests cannot validate

- Supports faster feedback cycles for development teams

Visual regression matters because it safeguards how users perceive and interact with the application, not just how it functions.

Introduction to Playwright for Visual Testing
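Playwright includes screenshot assertions out of the box. expect(page).toHaveScreenshot() captures the page, compares it against a stored baseline image, and fails when the difference exceeds the configured tolerance; when no baseline exists yet, it writes the captured image as the new baseline. Defaults for every assertion can live in playwright.config.ts. The sketch below is one possible setup rather than a recommended standard: the baseURL, viewport, and tolerance values are illustrative assumptions, and snapshotPathTemplate needs a recent Playwright release.

```typescript
// playwright.config.ts: project-wide defaults for screenshot assertions.
// baseURL, viewport and tolerance values are illustrative assumptions.
import { defineConfig } from '@playwright/test';

export default defineConfig({
  testDir: './tests',
  // Keep all baselines in one folder, grouped by test file.
  snapshotPathTemplate: '{testDir}/__screenshots__/{testFilePath}/{arg}{ext}',
  expect: {
    toHaveScreenshot: {
      // Per-pixel color tolerance from 0 (strict) to 1 (lax); 0.2 is the default.
      threshold: 0.2,
      // Fail only when more than 1% of all pixels differ.
      maxDiffPixelRatio: 0.01,
    },
  },
  use: {
    // Lets tests call page.goto('/') with relative URLs.
    baseURL: 'https://testdino.com',
    // A fixed viewport keeps screenshots comparable between runs.
    viewport: { width: 1280, height: 720 },
  },
});
```

Options passed directly to a single toHaveScreenshot() call, such as maxDiffPixels, override these project defaults for that assertion.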

Limitations of Traditional Playwright Visual Regression

Traditional Playwright visual regression relies mainly on pixel comparison, which can introduce instability in real-world test environments.

- High false positives: Minor rendering differences trigger failures even when the user experience remains unchanged.

- Sensitive to animations: Transitions and loaders cause inconsistent screenshots across test runs.

- Dynamic content noise: Timestamps, user data, and third-party widgets create unreliable differences.

- Environment-dependent rendering: Fonts, OS, GPU, and browser versions affect the screenshot output.

- Limited context awareness: Pixel differences do not explain what changed or why it matters.

- Manual triage overhead: Engineers must review and approve many non-critical visual failures.

These limitations make traditional visual regression harder to scale without additional context and intelligence.

What is MCP in Playwright Visual Testing?

Playwright MCP refers to using the Model Context Protocol with Playwright to add structured context and decision intelligence to automated testing workflows.

It allows test systems to understand page structure, accessibility data, runtime state, and code context instead of relying only on screenshots.

MCP-powered AI helps overcome the limitations of traditional visual regression by adding meaning to visual changes.

- Uses DOM and accessibility snapshots to explain why a visual change occurred

- Correlates visual diffs with related Playwright test steps and code updates

- Separates genuine UI regressions from dynamic or expected Playwright rendering changes

- Reduces false alarms caused by animations, loading states, and environment noise

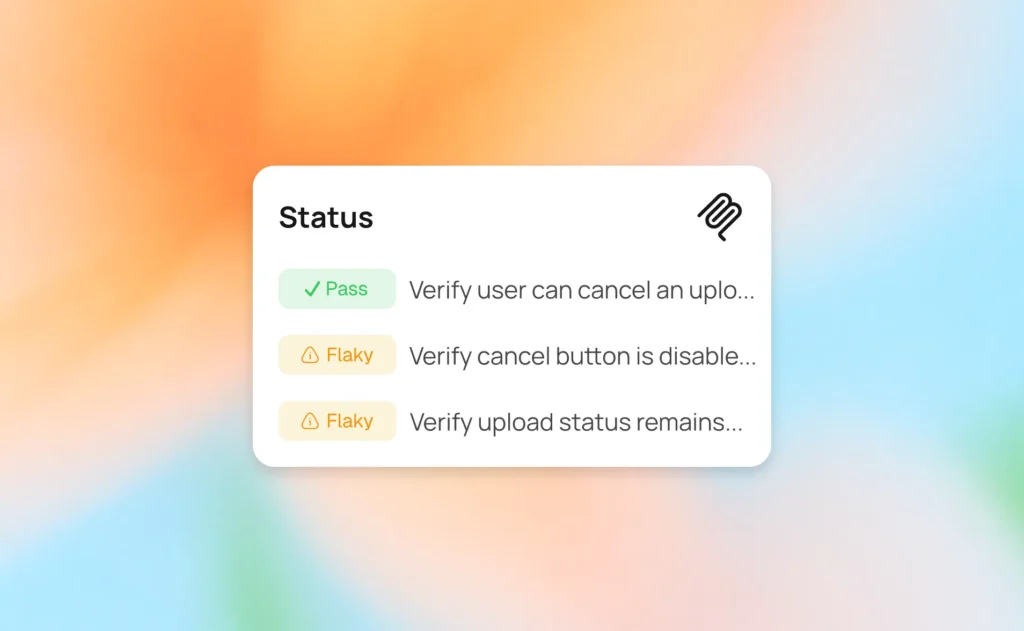

- Supports automated outcomes such as fail, accept, or review based on impact

This approach shifts visual regression from pixel comparison to context-aware UI validation.
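The idea of correlating visual diffs with code updates can be sketched in plain TypeScript. This is a minimal illustration under stated assumptions, not how Playwright MCP is implemented: the screenshotSources mapping, the file paths, and the correlate function are hypothetical, and a real pipeline would take the changed-file list from the commit or pull request under test.

```typescript
// Hypothetical sketch: link failing screenshots to changed source files.
// The mapping below is assumed to be maintained by the team.
const screenshotSources: Record<string, string[]> = {
  'login-page.png': ['src/pages/Login.tsx', 'src/styles/forms.css'],
  'homepage.png': ['src/pages/Home.tsx', 'src/styles/hero.css'],
};

// For each failing screenshot, list the changed files that render it.
function correlate(failing: string[], changedFiles: string[]): Record<string, string[]> {
  const changed = new Set(changedFiles);
  const result: Record<string, string[]> = {};
  for (const shot of failing) {
    const sources = screenshotSources[shot] ?? [];
    result[shot] = sources.filter((file) => changed.has(file));
  }
  return result;
}

console.log(correlate(['login-page.png', 'homepage.png'], ['src/styles/forms.css']));
// login-page.png links to src/styles/forms.css; homepage.png links to nothing
```

A diff with a linked code change looks intentional and may only need confirmation, while a diff with no linked change (homepage.png here) is a stronger regression candidate.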

How Playwright MCP Improves Visual Regression

Playwright MCP improves visual regression by combining visual signals with structured page context and runtime data.

Instead of judging changes only by pixel differences, it evaluates whether a visual change impacts usability or intent.

- Context-aware validation: Analyzes the DOM and accessibility structure alongside visual changes

- False positive reduction: Filters noise from dynamic content and animation timing

- Intent-based decisions: Matches visual changes with related code modifications

- Automated triage logic: Classifies results as failure, acceptable change, or review required

This results in more stable visual tests, fewer false positives, and faster, more confident release decisions.
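To make the false positive reduction step concrete, here is a minimal sketch that discards diff regions lying entirely inside areas known to hold dynamic content. The Rect shape, the coordinates, and the helper names are hypothetical; in practice the dynamic areas might come from element bounding boxes (for example Playwright's locator.boundingBox()) and the diff regions from the screenshot comparison.

```typescript
// Hypothetical sketch: drop diff regions that fall entirely inside areas
// known to hold dynamic content (timestamps, ads, live widgets).
interface Rect {
  x: number;
  y: number;
  width: number;
  height: number;
}

// True when `inner` lies completely within `outer`.
function isInside(inner: Rect, outer: Rect): boolean {
  return (
    inner.x >= outer.x &&
    inner.y >= outer.y &&
    inner.x + inner.width <= outer.x + outer.width &&
    inner.y + inner.height <= outer.y + outer.height
  );
}

// Keep only diff regions that no dynamic area can explain.
function filterDynamicNoise(diffs: Rect[], dynamicAreas: Rect[]): Rect[] {
  return diffs.filter((d) => !dynamicAreas.some((area) => isInside(d, area)));
}

const diffs: Rect[] = [
  { x: 10, y: 10, width: 50, height: 20 },   // inside the timestamp area
  { x: 300, y: 400, width: 80, height: 40 }, // in the main layout
];
const dynamicAreas: Rect[] = [{ x: 0, y: 0, width: 200, height: 50 }];

console.log(filterDynamicNoise(diffs, dynamicAreas)); // keeps only the diff at (300, 400)
```

Requiring full containment is a deliberately conservative choice: a diff that spills outside a dynamic area still reaches the later analysis steps.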

Playwright MCP Visual Regression Workflow

This workflow shows how Playwright MCP evaluates visual changes from test execution to the final decision.

It follows a clear sequence that can be applied without complex configuration.

- Run Playwright tests to capture screenshots during stable page states

- Compare against baselines to detect visual differences at the pixel level

- Collect page context using DOM, accessibility, and runtime data

- Analyze code changes related to affected UI components

- Classify the result as regression, acceptable change, or manual review

This structured flow delivers faster, more reliable visual regression decisions.
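The final classification step could look something like the function below. It is a hypothetical rule set, not Playwright MCP's actual logic: the ChangeSignals fields and the 0.5% noise floor are assumptions chosen to mirror the three outcomes in the workflow.

```typescript
// Hypothetical triage sketch: combine pixel, DOM, accessibility and code
// signals into one of three outcomes. Field names and cutoffs are assumptions.
type Verdict = 'regression' | 'acceptable' | 'review';

interface ChangeSignals {
  diffRatio: number;            // share of changed pixels, 0..1
  domStructureChanged: boolean; // elements added, removed or moved
  a11yRolesChanged: boolean;    // roles or hierarchy changed (names ignored)
  onlyTextChanged: boolean;     // differences limited to text content
  relatedCodeChanged: boolean;  // a linked style/component file changed
}

function classify(s: ChangeSignals): Verdict {
  // Below the noise floor: treat as rendering jitter.
  if (s.diffRatio < 0.005) return 'acceptable';
  // Structure or semantics changed with no matching code change.
  if ((s.domStructureChanged || s.a11yRolesChanged) && !s.relatedCodeChanged) {
    return 'regression';
  }
  // Same structure and roles, only text differs: a content update.
  if (s.onlyTextChanged && !s.domStructureChanged && !s.a11yRolesChanged) {
    return 'acceptable';
  }
  // Anything else, such as an apparently intentional redesign, goes to a human.
  return 'review';
}

console.log(classify({
  diffRatio: 0.03,
  domStructureChanged: false,
  a11yRolesChanged: false,
  onlyTextChanged: true,
  relatedCodeChanged: false,
})); // acceptable
```

Rule order matters here: the unexplained-structure check runs before the text-only check, so a layout break is never waved through as a content update.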

Creating Visual Baselines with Playwright MCP

Visual baselines serve as the reference images that future test runs will compare against.

Creating accurate baselines is critical because they define what the expected UI should look like.

Generate initial baselines:

Run your tests with the --update-snapshots flag to create baseline images:

```bash
npx playwright test --update-snapshots
```

This command captures screenshots and stores them in the configured snapshot directory.

Write a test that creates baselines:

```typescript
import { test, expect } from '@playwright/test';

test('create baseline for homepage', async ({ page }) => {
  await page.goto('https://testdino.com/');

  // Wait for page to be fully loaded
  await page.waitForLoadState('networkidle');

  // Capture baseline screenshot
  await expect(page).toHaveScreenshot('homepage.png');
});
```

Running Visual Regression Tests

Running visual regression tests with Playwright MCP follows the standard Playwright test execution process with additional context collection.

Execute tests locally:

```bash
npx playwright test
```

This command runs all tests and compares screenshots against their baselines. When an MCP-based analysis step is part of your pipeline, any visual differences are then passed to it for evaluation.

Run specific visual tests:

```bash
npx playwright test tests/visual/ --grep "visual"
```

Sample visual regression test:

```typescript
import { test, expect } from '@playwright/test';

test.describe('TestDino Visual Regression', () => {
  test('login page appearance', async ({ page }) => {
    await page.goto('https://app.testdino.com/login');
    await page.waitForLoadState('networkidle');

    await expect(page).toHaveScreenshot('login-page.png', {
      maxDiffPixels: 100,
    });
  });
});
```

Review test results:

After execution, Playwright generates an HTML report showing visual differences:

```bash
npx playwright show-report
```

Without MCP: Any change in the login subtitle text triggers a failure and manual screenshot review.

With MCP: The same change is classified as an acceptable content update because the DOM roles, layout, and accessibility structure remain intact.
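The login subtitle example can be illustrated with a small comparison that checks roles and nesting while ignoring accessible names. A11yNode is a simplified, hypothetical tree shape rather than Playwright's or MCP's snapshot format, and the page content is made up for the example.

```typescript
// Hypothetical sketch: compare two simplified accessibility trees by role and
// hierarchy only, so pure text edits do not count as structural changes.
interface A11yNode {
  role: string;
  name?: string; // accessible name / visible text (ignored by the comparison)
  children?: A11yNode[];
}

// Serialize roles and nesting only, dropping names and text.
function roleSkeleton(node: A11yNode): string {
  const kids = (node.children ?? []).map(roleSkeleton).join(',');
  return `${node.role}[${kids}]`;
}

function isContentOnlyChange(before: A11yNode, after: A11yNode): boolean {
  return roleSkeleton(before) === roleSkeleton(after);
}

const before: A11yNode = {
  role: 'main',
  children: [
    { role: 'heading', name: 'Log in' },
    { role: 'paragraph', name: 'Welcome back to TestDino' },
    { role: 'button', name: 'Sign in' },
  ],
};

// Only the subtitle text differs.
const after: A11yNode = {
  role: 'main',
  children: [
    { role: 'heading', name: 'Log in' },
    { role: 'paragraph', name: 'Good to see you again' },
    { role: 'button', name: 'Sign in' },
  ],
};

console.log(isContentOnlyChange(before, after)); // true
```

Removing the button or wrapping the form in a new region would change the skeleton and push the diff back toward failure or review.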

How Playwright MCP Analyzes Visual Differences

MCP analyzes visual differences by combining pixel-level changes with structural page context to determine if changes are meaningful.

In an MCP-enabled pipeline, this analysis runs after Playwright reports visual differences from a screenshot comparison.

The analysis process:

When a visual difference is detected, MCP performs the following evaluation:

- Pixel difference calculation: Measures the percentage and location of changed pixels

- DOM structure comparison: Analyzes if HTML elements were added, removed, or repositioned

- Accessibility tree analysis: Checks if semantic meaning or accessibility properties changed

- Code correlation: Maps visual changes to recent code modifications in stylesheets or components

- Classification decision: Categorizes the change as regression, acceptable, or needs review
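A bare-bones version of the pixel difference calculation is sketched below: it compares two same-sized RGBA buffers and reports the share of changed pixels plus the bounding box of the change. The diffPixels function and its per-channel tolerance are illustrative assumptions; production comparators, including Playwright's, use a perceptual color threshold rather than raw channel deltas.

```typescript
// Hypothetical sketch: compare two same-sized RGBA buffers and report how
// many pixels changed, what share of the image that is, and where.
interface DiffResult {
  changedPixels: number;
  ratio: number; // changedPixels / total pixels, 0..1
  box: { x: number; y: number; width: number; height: number } | null;
}

function diffPixels(
  a: Uint8Array,
  b: Uint8Array,
  width: number,
  height: number,
  tolerance = 0, // max allowed per-channel difference (0..255)
): DiffResult {
  if (a.length !== width * height * 4 || b.length !== a.length) {
    throw new Error('Buffers must be RGBA and match width x height');
  }
  let changed = 0;
  let minX = width, minY = height, maxX = -1, maxY = -1;
  for (let y = 0; y < height; y++) {
    for (let x = 0; x < width; x++) {
      const i = (y * width + x) * 4;
      let differs = false;
      for (let c = 0; c < 4 && !differs; c++) {
        differs = Math.abs(a[i + c] - b[i + c]) > tolerance;
      }
      if (differs) {
        changed++;
        // Grow the bounding box to include this pixel.
        minX = Math.min(minX, x);
        minY = Math.min(minY, y);
        maxX = Math.max(maxX, x);
        maxY = Math.max(maxY, y);
      }
    }
  }
  return {
    changedPixels: changed,
    ratio: changed / (width * height),
    box: changed === 0
      ? null
      : { x: minX, y: minY, width: maxX - minX + 1, height: maxY - minY + 1 },
  };
}

// Two 2x2 white images; in the second one, pixel (1, 1) turns red.
const before = new Uint8Array(2 * 2 * 4).fill(255);
const after = Uint8Array.from(before);
after.set([255, 0, 0, 255], (1 * 2 + 1) * 4);

console.log(diffPixels(before, after, 2, 2));
// changedPixels 1, ratio 0.25, box at x 1, y 1, sized 1x1
```

The ratio feeds threshold checks such as maxDiffPixelRatio, while the bounding box tells the later DOM and accessibility steps where to look.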

Context signals MCP evaluates:

- Structural changes: New elements, removed elements, or layout shifts in the DOM

- Style changes: CSS property modifications that caused the visual difference

- Content changes: Text updates, image swaps, or dynamic data variations

- Animation artifacts: Timing-based differences from transitions or loading states

- Environmental factors: Font rendering, browser differences, or resolution variations
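The structural signal can be approximated by diffing flattened element lists from two runs. This is a hypothetical sketch: it assumes each element carries a stable key (such as a data-testid value) plus a recorded parent and sibling index, which would have to be collected from the DOM beforehand.

```typescript
// Hypothetical sketch: diff two flattened element lists captured from the DOM.
// Each element is assumed to carry a stable key (e.g. a data-testid value).
interface ElementInfo {
  key: string;    // stable identifier
  parent: string; // key of the parent element
  index: number;  // position among its siblings
}

interface StructureDiff {
  added: string[];
  removed: string[];
  moved: string[];
}

function compareStructure(before: ElementInfo[], after: ElementInfo[]): StructureDiff {
  const prev = new Map(before.map((e) => [e.key, e] as const));
  const next = new Map(after.map((e) => [e.key, e] as const));
  return {
    added: after.filter((e) => !prev.has(e.key)).map((e) => e.key),
    removed: before.filter((e) => !next.has(e.key)).map((e) => e.key),
    // Present in both runs, but under a different parent or sibling position.
    moved: after
      .filter((e) => {
        const old = prev.get(e.key);
        return old !== undefined && (old.parent !== e.parent || old.index !== e.index);
      })
      .map((e) => e.key),
  };
}

const beforeDom: ElementInfo[] = [
  { key: 'header', parent: 'root', index: 0 },
  { key: 'nav', parent: 'header', index: 0 },
  { key: 'hero', parent: 'root', index: 1 },
  { key: 'footer', parent: 'root', index: 2 },
];
const afterDom: ElementInfo[] = [
  { key: 'header', parent: 'root', index: 0 },
  { key: 'banner', parent: 'root', index: 1 }, // new promo banner
  { key: 'hero', parent: 'root', index: 2 },
  { key: 'footer', parent: 'root', index: 3 },
];

console.log(compareStructure(beforeDom, afterDom));
// added: banner; removed: nav; moved: hero, footer
```

Inserting the banner shifts the sibling index of hero and footer, so they are reported as moved; a fuller version might separate shifted siblings from genuinely relocated elements.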

Handling Dynamic Content and Animations

Dynamic content and animations are common sources of false positives in visual regression testing. The standard Playwright techniques below handle these elements without compromising test reliability, and they give MCP-based analysis cleaner input.

Hiding dynamic elements:

Use CSS to hide elements that change on every page load:

```typescript
import { test, expect } from '@playwright/test';

test('homepage without dynamic content', async ({ page }) => {
  // Relative URL assumes baseURL is set in playwright.config.ts
  await page.goto('/');

  // Hide timestamps, user-specific data, ads
  await page.addStyleTag({
    content: `
      .timestamp,
      .current-user,
      .advertisement,
      .live-chat {
        visibility: hidden !important;
      }
    `,
  });

  await expect(page).toHaveScreenshot();
});
```

This keeps baselines clean, so MCP-powered analysis focuses on real layout and component changes, not timestamps or user-specific noise.
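Recent Playwright releases (1.41 and later) also accept a stylePath option for toHaveScreenshot, which applies a stylesheet while the screenshot is taken and keeps the hiding rules in one file instead of repeating addStyleTag in every test. A minimal config sketch, assuming a CommonJS-style config where __dirname is available and a hypothetical screenshot.css next to it containing the rules above:

```typescript
// playwright.config.ts (sketch): apply one stylesheet to every screenshot
// assertion. screenshot.css is a hypothetical file holding the hiding rules,
// e.g. `.timestamp, .advertisement { visibility: hidden !important; }`.
import path from 'node:path';
import { defineConfig } from '@playwright/test';

export default defineConfig({
  expect: {
    toHaveScreenshot: {
      // Resolved next to this config file (assumes CommonJS-style __dirname).
      stylePath: path.join(__dirname, 'screenshot.css'),
    },
  },
});
```

Because the stylesheet applies only during capture, the page under test behaves normally for any functional assertions in the same test.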

Waiting for animations to complete:

Ensure animations finish before capturing screenshots:

```typescript
import { test, expect } from '@playwright/test';

test('modal with animation', async ({ page }) => {
  // Relative URL assumes baseURL is set in playwright.config.ts
  await page.goto('/');
  await page.locator('#open-modal').click();

  // Wait for the CSS transition to end instead of sleeping for a fixed time;
  // a hard-coded waitForTimeout(500) breaks as soon as the duration changes
  await page.waitForFunction(() => {
    const modal = document.querySelector('.modal');
    return modal !== null && window.getComputedStyle(modal).opacity === '1';
  });

  await expect(page).toHaveScreenshot('modal-open.png');
});
```

By stabilizing the frame before capture, MCP can reliably tell the difference between animation artifacts and true UI regressions.

Using mask regions:

Ignore specific areas of the screenshot:

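```typescript
import { test, expect } from '@playwright/test';

test('page with dynamic region', async ({ page }) => {
  // Relative URL assumes baseURL is set in playwright.config.ts
  await page.goto('/');

  // Masked elements are covered with a solid box before comparison
  await expect(page).toHaveScreenshot({
    mask: [page.locator('.dynamic-widget')],
  });
});
```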

Masked regions tell MCP and its AI analysis which areas to ignore, while still evaluating structure, accessibility, and layout across the rest of the page.

Common Issues and Troubleshooting Tips

Even visual regression tests created with AI through Playwright MCP can face stability challenges that require practical fixes.

The following table addresses the most common issues teams encounter when implementing Playwright MCP visual regression testing.

| Issue | Fix |
|---|---|
| Font rendering differs across operating systems, causing false positives | Use Docker containers with identical font libraries to ensure consistency across environments. |
| Screenshots captured before the page fully loads create flaky tests | Add waitForLoadState('networkidle') and ensure fonts are fully loaded before taking the snapshot. |
| Baseline images consume excessive repository storage over time | Configure Git LFS (Large File Storage) for screenshots and apply appropriate image compression. |
| MCP server fails to start or loses connection during execution | Verify port availability and implement retry logic in the test setup phase. |
| Minor CSS changes trigger failures with high diff percentages | Adjust maxDiffPixels and threshold sensitivity values in your Playwright configuration. |
| Tests pass locally but consistently fail in the CI pipeline | Match browser versions and viewports exactly; generate baseline images in the CI environment rather than locally. |

These solutions address the most frequent pain points and help maintain stable visual regression test suites.
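For the MCP connection row, retry logic in the setup phase can be as simple as a generic wrapper with exponential backoff. withRetry and connectToMcpServer are hypothetical names, and the stand-in connection simply fails twice before succeeding so the behavior is visible; swap in your real connection call.

```typescript
// Hypothetical retry helper for flaky setup steps such as connecting to an
// MCP server. Attempt count and delays are illustrative defaults.
async function withRetry<T>(
  task: () => Promise<T>,
  attempts = 3,
  baseDelayMs = 500,
): Promise<T> {
  let lastError: unknown;
  for (let attempt = 1; attempt <= attempts; attempt++) {
    try {
      return await task();
    } catch (err) {
      lastError = err;
      if (attempt < attempts) {
        // Exponential backoff: baseDelayMs, then 2x, then 4x, ...
        const delay = baseDelayMs * 2 ** (attempt - 1);
        await new Promise<void>((resolve) => setTimeout(() => resolve(), delay));
      }
    }
  }
  // Every attempt failed: surface the last error to the test runner.
  throw lastError;
}

// Hypothetical stand-in for a real connection call: fails twice, then succeeds.
let calls = 0;
async function connectToMcpServer(): Promise<string> {
  calls++;
  if (calls < 3) throw new Error('connection refused');
  return 'connected';
}

withRetry(connectToMcpServer, 3, 10).then((status) => {
  console.log(`${status} after ${calls} attempts`); // connected after 3 attempts
});
```

Rethrowing the last error after the final attempt keeps genuine outages visible instead of letting the suite run against a dead server.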

Best Practices for Playwright MCP Visual Regression

Following these best practices ensures your visual regression tests remain stable, maintainable, and deliver consistent value.

When Manual Review is Still Required

Automation significantly reduces visual noise, but it cannot fully replace human judgment in every scenario.

Certain visual changes depend on intent, context, or subjective design decisions that are difficult to infer programmatically.

Manual review is still required when:

- Intentional UI redesigns occur: Layout restructures, spacing changes, or new visual hierarchies must be consciously approved as the new expected state.

- Content-driven updates are frequent: Marketing banners, promotional sections, and seasonal visuals change by design and require contextual validation.

- Cross-browser rendering varies subtly: Font smoothing, anti-aliasing, or subpixel differences may look acceptable but need human confirmation.

- Design systems evolve: Component-level refinements often impact multiple screens and benefit from a holistic visual review.

Manual review complements automation by validating visual intent, not just visual difference.

Conclusion

Visual regression testing keeps your app looking right, not just working right.

Playwright handles the heavy lifting, but pixel-by-pixel comparisons create a lot of noise. When you layer in DOM changes, accessibility data, and code context alongside visual diffs, suddenly the picture becomes clearer.

You see what actually broke and why it matters.

This cuts down false alarms.

Your team stops chasing false issues and focuses on real UI problems instead.

TestDino takes this further by centralizing your Playwright test runs and using AI to classify failures automatically.

It connects test results to your PRs and commits, so your visual review workflow stays tight and organized.

FAQs

Applications with frequent UI updates, complex layouts, or shared design systems benefit most because visual drift is harder to catch with functional tests.
